Introduction

ASM

ASM is currently in public beta. Scan the QR code below to join the discussion group:

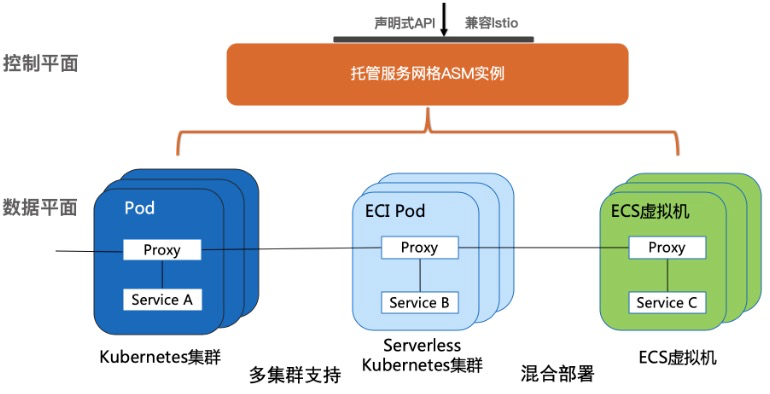

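Alibaba Cloud Service Mesh (ASM) is a fully managed service mesh platform that is compatible with the community's open source Istio service mesh. It simplifies service governance, including traffic routing and splitting between service calls, authentication and security for service-to-service communication, and mesh observability, which greatly reduces the workload of development and operations teams. The ASM architecture is shown in the following diagram: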

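ASM targets core scenarios such as hybrid cloud, multi-cloud, multi-cluster, and the migration of non-containerized applications, where it provides a unified, managed service mesh. It offers Alibaba Cloud users the following capabilities:

- Consistent management

  Manage, in a consistent way, application services running on ACK managed Kubernetes clusters, dedicated Kubernetes clusters, Serverless Kubernetes clusters, and registered clusters in hybrid-cloud or multi-cloud scenarios, which gives consistent observability and traffic control.

- Unified traffic management

  Unified traffic management across mixed environments of containers and virtual machines.

- Managed control-plane core components

  The core components of the control plane are hosted, which minimizes user resource overhead and operations cost.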

ArgoCD

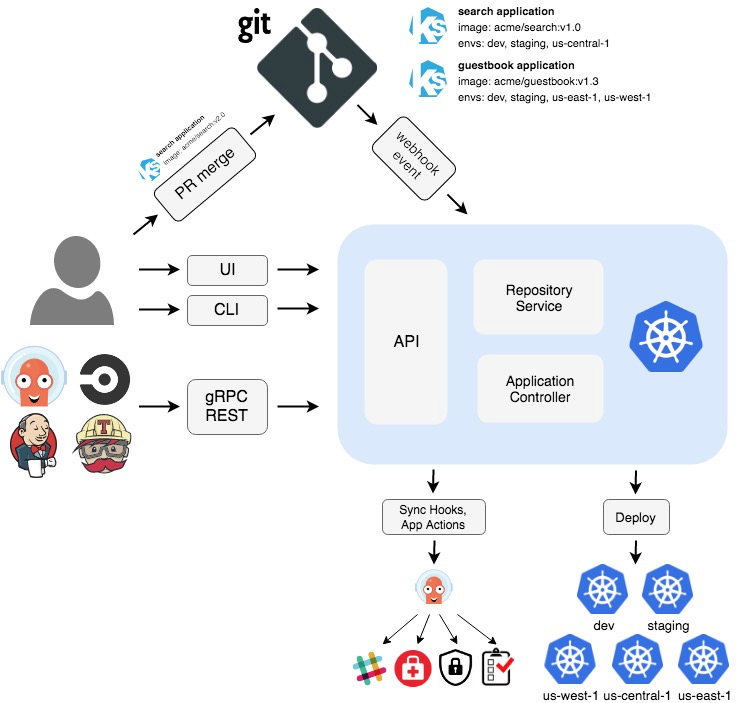

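ArgoCD is a Kubernetes configuration management tool for continuous delivery. It follows the GitOps pattern: it watches the state of the running applications, compares it with the state declared in a Git repository, and automatically deploys updates to the environment. The ArgoCD architecture is shown in the following diagram: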

Flagger

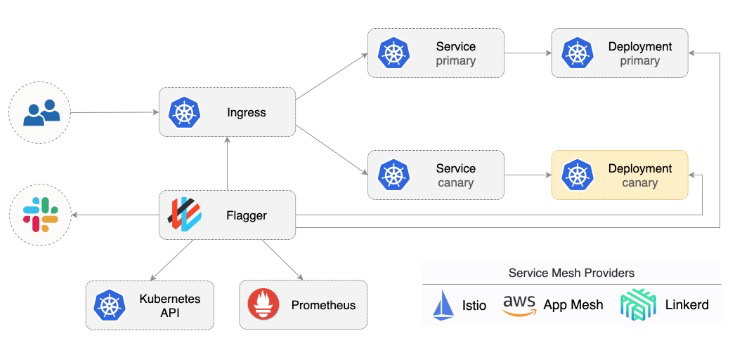

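Flagger is a Kubernetes operator that fully automates progressive application delivery. It analyzes the monitoring metrics collected by Prometheus and uses traffic management technologies or tools such as Istio and App Mesh to roll out an application progressively. The architecture is shown in the following diagram: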

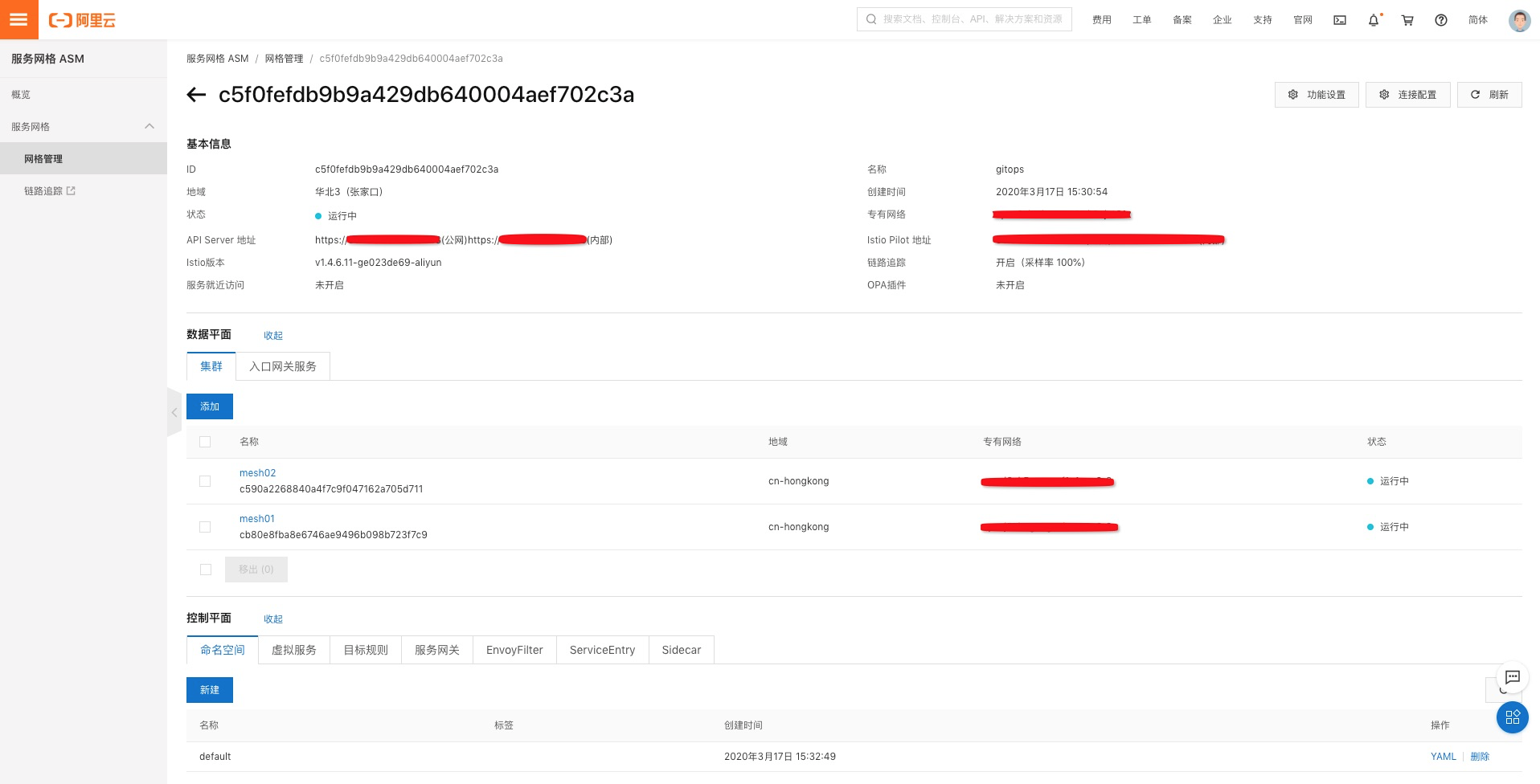

Create an ASM instance

Following the ASM help documentation, create an ASM instance and add the two ACK clusters mesh01 and mesh02:

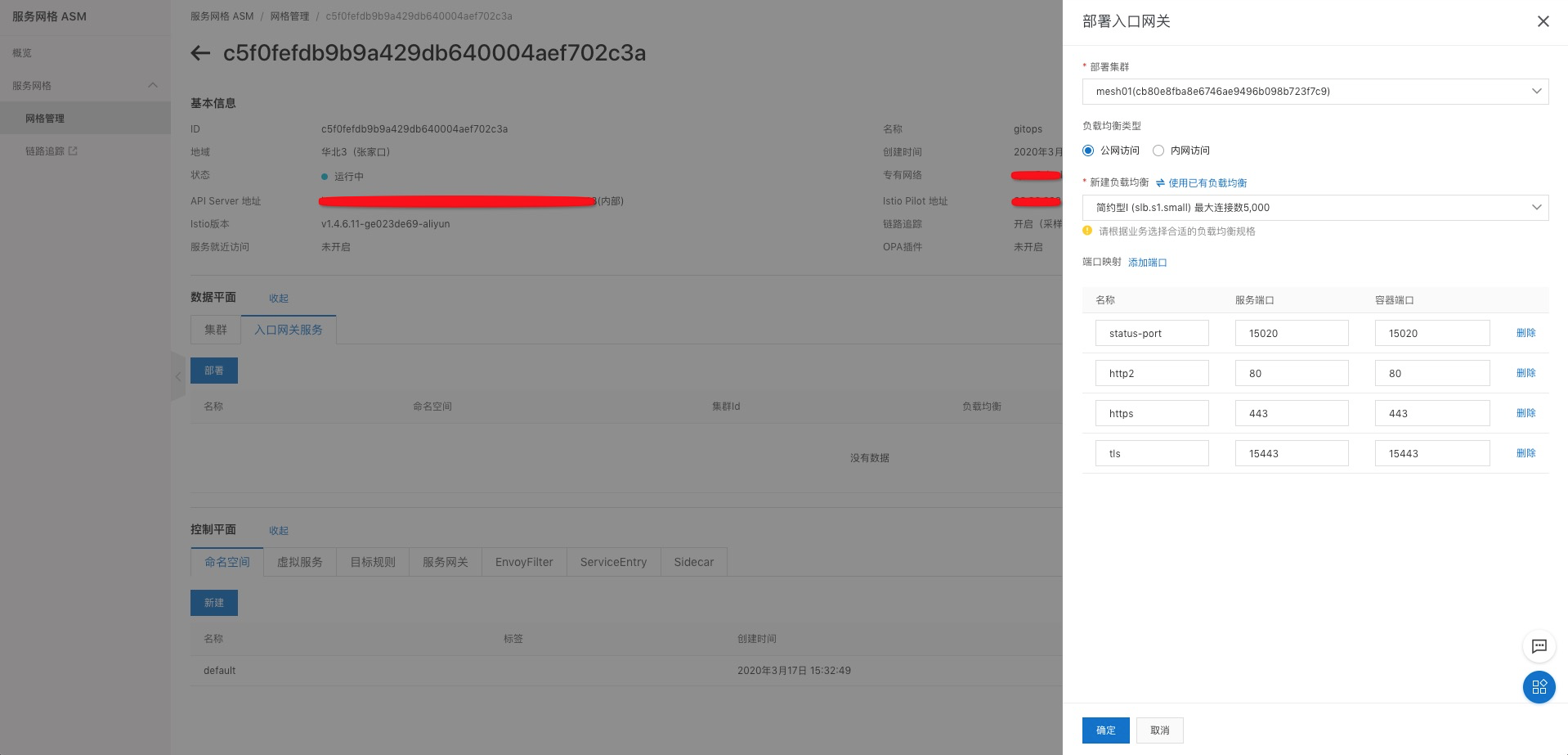

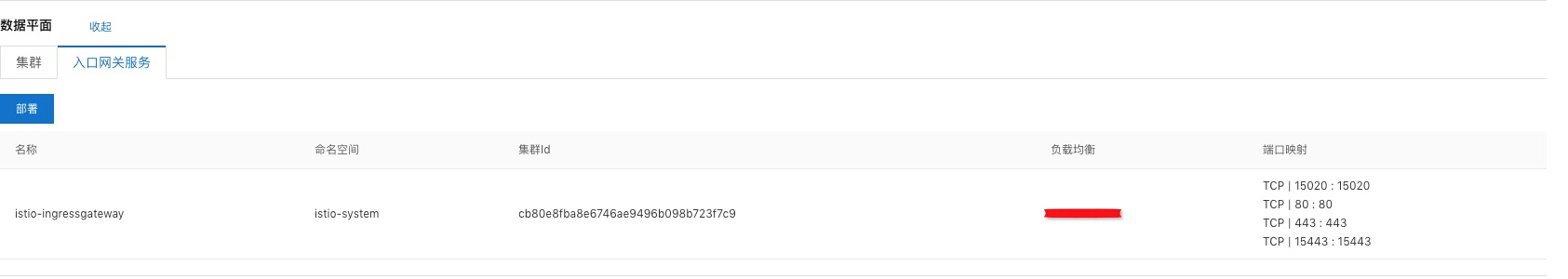

Deploy the ingress gateway service to the mesh01 cluster:

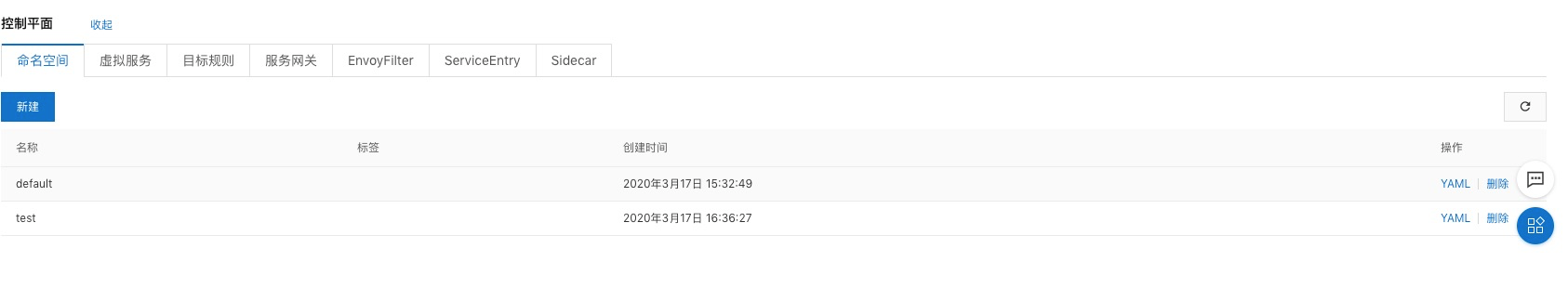

Create a namespace named test in the control plane:

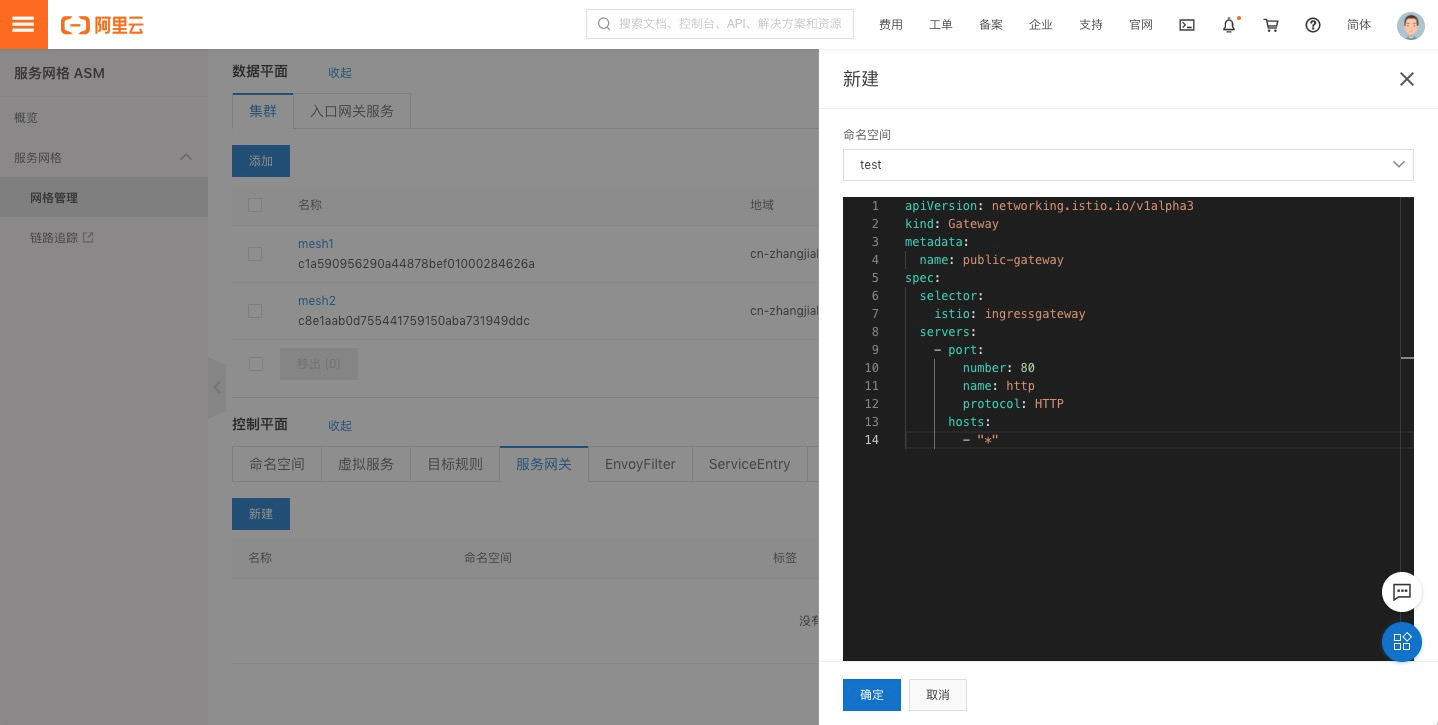

Create a Gateway in the control plane:

    apiVersion: networking.istio.io/v1alpha3
    kind: Gateway
    metadata:
      name: public-gateway
      namespace: istio-system
    spec:
      selector:
        istio: ingressgateway
      servers:
        - port:
            number: 80
            name: http
            protocol: HTTP
          hosts:
            - "*"

Deploy Flagger

Deploy Flagger and its dependencies on both ACK clusters, mesh01 and mesh02, using the following steps.

Deploy Prometheus

    $ kubectl apply -k github.com/haoshuwei/argocd-samples/flagger/prometheus/

Deploy Flagger

Create a secret from the kubeconfig of the ASM instance:

    $ kubectl -n istio-system create secret generic istio-kubeconfig --from-file kubeconfig
    $ kubectl -n istio-system label secret istio-kubeconfig istio/multiCluster=true

Install Flagger with Helm:

    $ helm repo add flagger https://flagger.app
    $ helm repo update
    $ kubectl apply -f https://raw.githubusercontent.com/weaveworks/flagger/master/artifacts/flagger/crd.yaml
    $ helm upgrade -i flagger flagger/flagger \
        --namespace=istio-system \
        --set crd.create=false \
        --set meshProvider=istio \
        --set metricsServer=http://prometheus:9090 \
        --set istio.kubeconfig.secretName=istio-kubeconfig \
        --set istio.kubeconfig.key=kubeconfig

Deploy Grafana

    $ helm upgrade -i flagger-grafana flagger/grafana \
        --namespace=istio-system \
        --set url=http://prometheus:9090

To make the grafana service reachable from outside, create a virtual service for it in the ASM console:

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: grafana
      namespace: istio-system
    spec:
      hosts:
        - "grafana.istio.example.com"
      gateways:
        - public-gateway.istio-system.svc.cluster.local
      http:
        - route:
            - destination:
                host: flagger-grafana

Access the service:

Create the namespace and add the label

    $ kubectl create ns test
    $ kubectl label namespace test istio-injection=enabled

Deploy ArgoCD

ArgoCD can be deployed on either of the ACK clusters.

Deploy the ArgoCD server:

    $ kubectl create namespace argocd
    $ kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

Install the ArgoCD CLI:

    $ VERSION=$(curl --silent "https://api.github.com/repos/argoproj/argo-cd/releases/latest" | grep '"tag_name"' | sed -E 's/.*"([^"]+)".*/\1/')
    $ curl -sSL -o /usr/local/bin/argocd https://github.com/argoproj/argo-cd/releases/download/$VERSION/argocd-linux-amd64
    $ chmod +x /usr/local/bin/argocd

Get and change the login password. In the ArgoCD releases current at the time of writing, the initial admin password is the name of the argocd-server pod, which the first command prints:

    $ kubectl get pods -n argocd -l app.kubernetes.io/name=argocd-server -o name | cut -d'/' -f 2
    $ argocd login ip:port
    $ argocd account update-password

Access the service:

Example GitOps workflow for fully automated progressive delivery

Add clusters to ArgoCD and deploy the applications

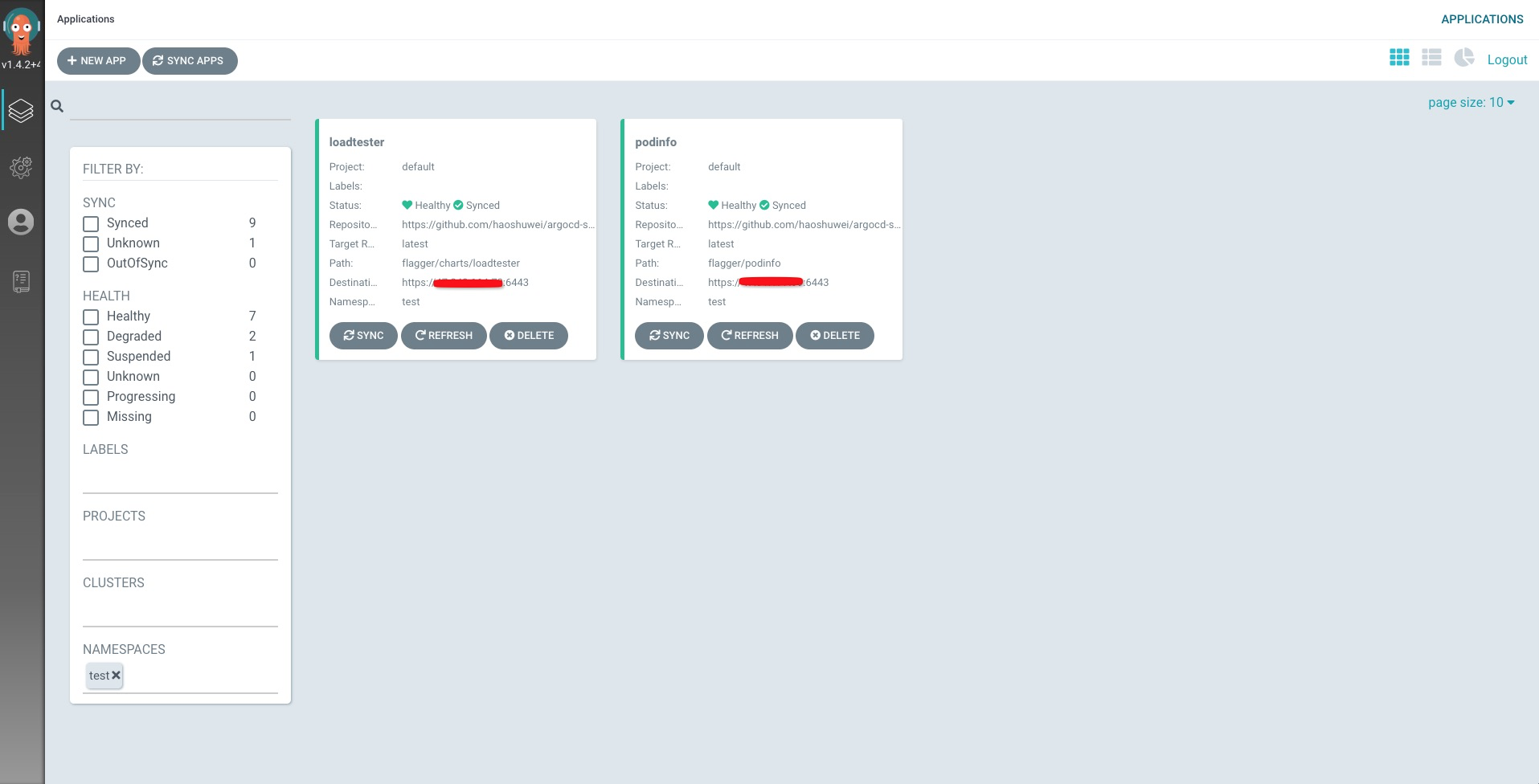

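In this example, the sample application podinfo is deployed to the mesh02 cluster and the load-testing application loadtester is deployed to the mesh01 cluster. Both go into the test namespace.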

Add the Git repository https://github.com/haoshuwei/argocd-samples.git to ArgoCD:

    $ argocd repo add https://github.com/haoshuwei/argocd-samples.git --name argocd-samples
    repository 'https://github.com/haoshuwei/argocd-samples.git' added
    $ argocd repo list
    TYPE  NAME            REPO                                             INSECURE  LFS    CREDS  STATUS      MESSAGE
    git   argocd-samples  https://github.com/haoshuwei/argocd-samples.git  false     false  false  Successful

Add the mesh01 and mesh02 clusters to ArgoCD using their kubeconfig files:

    $ argocd cluster add mesh01 --kubeconfig=mesh01
    INFO[0000] ServiceAccount "argocd-manager" created in namespace "kube-system"
    INFO[0000] ClusterRole "argocd-manager-role" created
    INFO[0000] ClusterRoleBinding "argocd-manager-role-binding" created
    $ argocd cluster add mesh02 --kubeconfig=mesh02
    INFO[0000] ServiceAccount "argocd-manager" created in namespace "kube-system"
    INFO[0000] ClusterRole "argocd-manager-role" created
    INFO[0000] ClusterRoleBinding "argocd-manager-role-binding" created
    $ argocd cluster list | grep mesh
    https://xx.xx.xxx.xx:6443   mesh02  1.16+  Successful
    https://xx.xxx.xxx.xx:6443  mesh01  1.16+  Successful

Deploy the podinfo application to the mesh02 cluster:

    $ argocd app create --project default --name podinfo \
        --repo https://github.com/haoshuwei/argocd-samples.git \
        --path flagger/podinfo \
        --dest-server https://xx.xx.xxx.xx:6443 \
        --dest-namespace test \
        --revision latest \
        --sync-policy automated

This command creates an application named podinfo. Its source is the set of files under the flagger/podinfo subdirectory of the Git repository https://github.com/haoshuwei/argocd-samples.git on the latest branch. The application is deployed to the test namespace of the cluster at https://xx.xx.xxx.xx:6443, and its sync policy is automated.

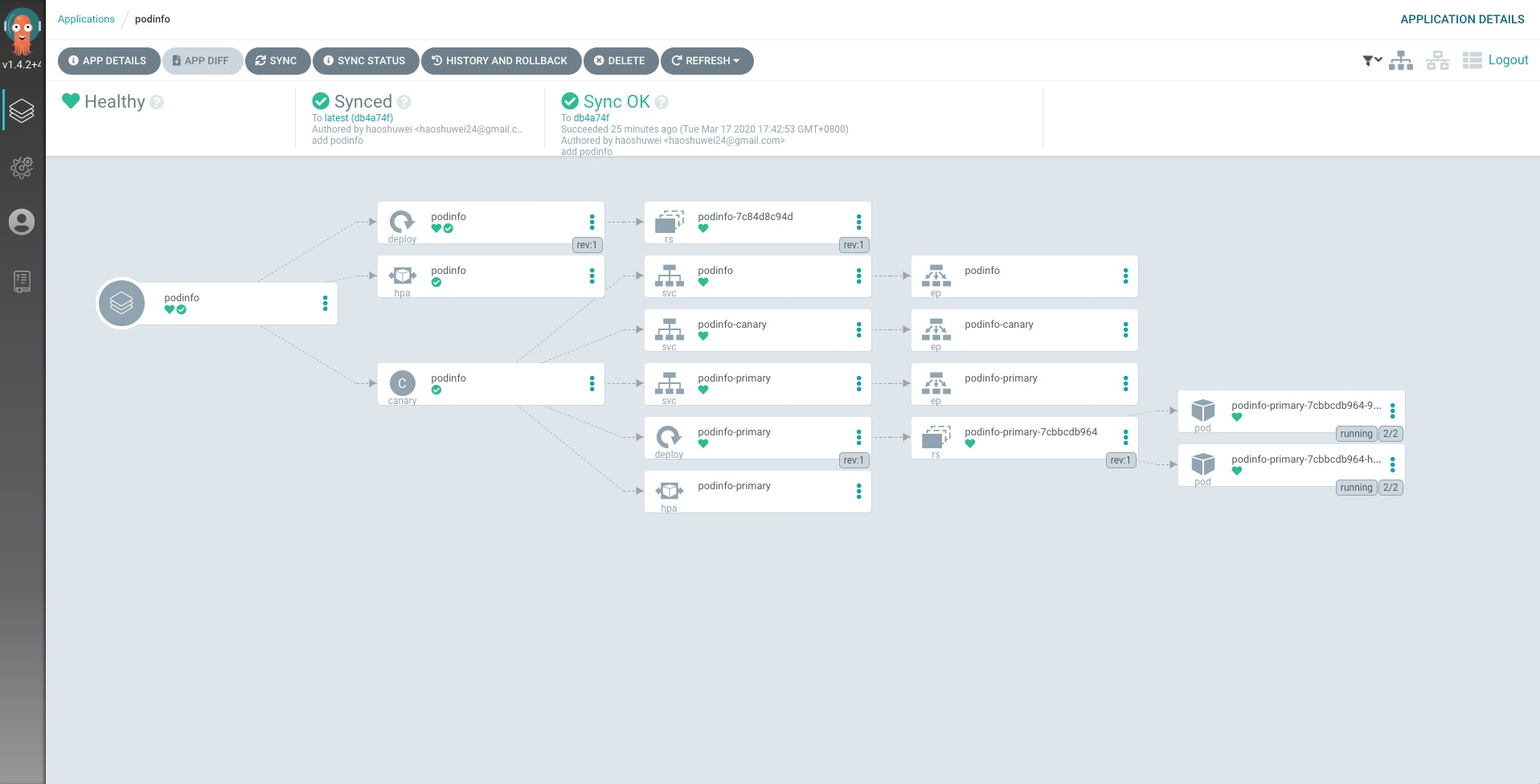
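The same application can also be described declaratively. The following is a minimal sketch, not the author's setup: it builds an ArgoCD `Application` manifest that mirrors the flags of the `argocd app create` command and prints it as JSON, which `kubectl apply -f -` accepts. The function name `podinfo_application` is hypothetical, the destination URL is the masked placeholder from the command and must be replaced, and the `argocd` namespace is assumed from the installation step.

```python
import json


def podinfo_application(dest_server: str) -> dict:
    """Build an ArgoCD Application manifest mirroring the CLI flags above."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        # Assumption: ArgoCD runs in the "argocd" namespace created earlier.
        "metadata": {"name": "podinfo", "namespace": "argocd"},
        "spec": {
            "project": "default",                      # --project
            "source": {
                "repoURL": "https://github.com/haoshuwei/argocd-samples.git",  # --repo
                "path": "flagger/podinfo",            # --path
                "targetRevision": "latest",           # --revision
            },
            "destination": {
                "server": dest_server,                 # --dest-server
                "namespace": "test",                   # --dest-namespace
            },
            "syncPolicy": {"automated": {}},          # --sync-policy automated
        },
    }


if __name__ == "__main__":
    # Placeholder address copied from the command above; replace it with the
    # real API server URL of the mesh02 cluster.
    manifest = podinfo_application("https://xx.xx.xxx.xx:6443")
    print(json.dumps(manifest, indent=2))
```

Keeping the manifest itself in Git is one way to put the application definition under the same review process as the workloads it deploys.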

The flagger/podinfo subdirectory contains four manifest files: deployment.yaml, hpa.yaml, kustomization.yaml, and canary.yaml. The canary.yaml file is the core manifest that drives the fully automated progressive canary release in this example. Its content is as follows:

    apiVersion: flagger.app/v1beta1
    kind: Canary
    metadata:
      name: podinfo
      namespace: test
    spec:
      targetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: podinfo
      progressDeadlineSeconds: 60
      autoscalerRef:
        apiVersion: autoscaling/v2beta1
        kind: HorizontalPodAutoscaler
        name: podinfo
      service:
        port: 9898
        gateways:
          - public-gateway.istio-system.svc.cluster.local
        hosts:
          - app.istio.example.com
        trafficPolicy:
          tls:
            # use ISTIO_MUTUAL when mTLS is enabled
            mode: DISABLE
      analysis:
        interval: 30s
        threshold: 5
        maxWeight: 50
        stepWeight: 5
        metrics:
          - name: request-success-rate
            threshold: 99
            interval: 30s
          - name: request-duration
            threshold: 500
            interval: 30s
        webhooks:
          - name: load-test
            url: http://loadtester.test/
            timeout: 5s
            metadata:
              cmd: "hey -z 1m -q 10 -c 2 http://podinfo-canary.test:9898/"

The canary.yaml file defines the following key parts.

    spec:
      targetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: podinfo
      progressDeadlineSeconds: 60
      autoscalerRef:
        apiVersion: autoscaling/v2beta1
        kind: HorizontalPodAutoscaler
        name: podinfo

These fields mean that the Canary resource watches and references the Deployment and the HorizontalPodAutoscaler named podinfo.

service:

port: 9898

gateways:

- public-gateway.istio-system.svc.cluster.local

hosts:

- app.istio.example.com

trafficPolicy:

tls:

# use ISTIO_MUTUAL when mTLS is enabled

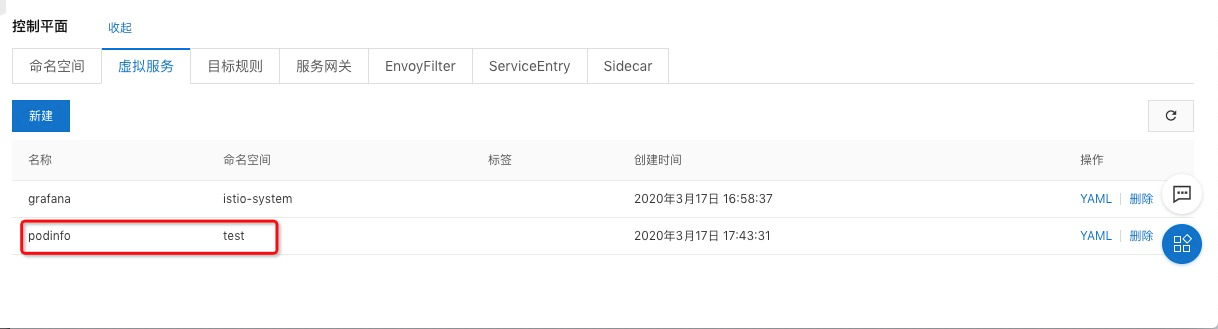

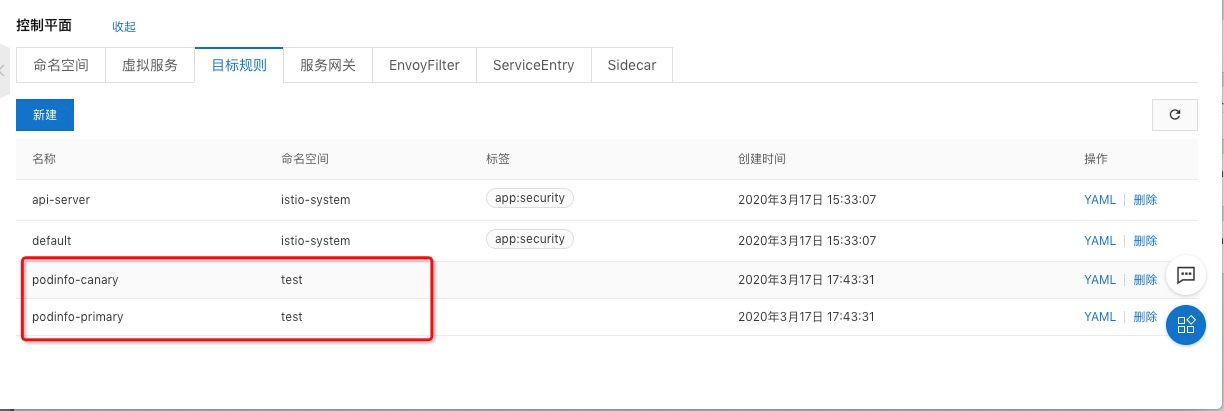

mode: DISABLE以上字段表示canary資源會在ASM控制面板自動為podinfo應用創建虛擬服務,名字也是podinfo。
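To make the generated virtual service more concrete, here is a rough sketch of its shape at a given canary weight. It assumes Flagger's usual naming of the `podinfo-primary` and `podinfo-canary` services (the `podinfo-canary` host also appears in the webhook command of canary.yaml). The helper names are hypothetical, and the object Flagger actually writes may contain more fields and differ between versions.

```python
def weighted_routes(canary_weight: int, name: str = "podinfo") -> list:
    """Split traffic between the primary and canary services by weight."""
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary_weight must be between 0 and 100")
    return [
        {"destination": {"host": f"{name}-primary"}, "weight": 100 - canary_weight},
        {"destination": {"host": f"{name}-canary"}, "weight": canary_weight},
    ]


def virtual_service(canary_weight: int) -> dict:
    """Simplified VirtualService using the gateway and host from canary.yaml."""
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "VirtualService",
        "metadata": {"name": "podinfo", "namespace": "test"},
        "spec": {
            "gateways": ["public-gateway.istio-system.svc.cluster.local"],
            "hosts": ["app.istio.example.com"],
            "http": [{"route": weighted_routes(canary_weight)}],
        },
    }


if __name__ == "__main__":
    # Before a release all traffic goes to the primary; during analysis the
    # canary weight grows step by step.
    for weight in (0, 5, 50):
        print(weight, virtual_service(weight)["spec"]["http"][0]["route"])
```

The two weights always sum to 100, so raising the canary weight lowers the share of the primary by the same amount.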

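      analysis:
        interval: 30s
        threshold: 5
        maxWeight: 50
        stepWeight: 5
        metrics:
          - name: request-success-rate
            threshold: 99
            interval: 30s
          - name: request-duration
            threshold: 500
            interval: 30s
        webhooks:
          - name: load-test
            url: http://loadtester.test/
            timeout: 5s
            metadata:
              cmd: "hey -z 1m -q 10 -c 2 http://podinfo-canary.test:9898/"

These fields mean that a new version of podinfo is tested and analyzed before it is released:

- interval: 30s runs the analysis every 30 seconds.
- threshold: 5 treats the release as failed once 5 checks have failed.
- maxWeight: 50 caps the traffic weight shifted to the new version at 50.
- stepWeight: 5 raises the weight by 5 at each step.
- metrics defines two metrics. For request-success-rate, the request success rate must not drop below 99. For request-duration, the request duration must not exceed 500 ms (Flagger's built-in check uses the P99 latency).

The task that generates the test traffic is defined in the webhooks field.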
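The interplay of these settings can be illustrated with a small simulation. This is a simplified model written for this article, not Flagger's implementation: it assumes one metric sample per interval, a failure counter that is never reset during an analysis, and promotion on the first passing check after maxWeight has been reached. The names `Analysis`, `check_passes`, and `simulate` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Analysis:
    interval_s: int = 30             # analysis.interval
    threshold: int = 5               # analysis.threshold: failed checks before rollback
    max_weight: int = 50             # analysis.maxWeight
    step_weight: int = 5             # analysis.stepWeight
    min_success_rate: float = 99.0   # request-success-rate threshold (percent)
    max_duration_ms: float = 500.0   # request-duration threshold (milliseconds)


def check_passes(cfg: Analysis, success_rate: float, duration_ms: float) -> bool:
    """A check passes only if both metrics are within their thresholds."""
    return success_rate >= cfg.min_success_rate and duration_ms <= cfg.max_duration_ms


def simulate(samples, cfg: Analysis = Analysis()):
    """Replay (success_rate, duration_ms) samples, one per analysis interval.

    Returns (outcome, weights): outcome is "promoted", "rolled back" or
    "in progress"; weights lists every canary weight that was set.
    """
    weight, failed, weights = 0, 0, []
    for success_rate, duration_ms in samples:
        if not check_passes(cfg, success_rate, duration_ms):
            failed += 1
            if failed >= cfg.threshold:
                return "rolled back", weights
            continue  # halt advancement and keep the current weight
        if weight >= cfg.max_weight:
            return "promoted", weights
        weight = min(weight + cfg.step_weight, cfg.max_weight)
        weights.append(weight)
    return "in progress", weights


if __name__ == "__main__":
    good, bad = (99.9, 120.0), (92.0, 800.0)
    print(simulate([good] * 11))               # healthy release
    print(simulate([good, good] + [bad] * 5))  # release that keeps failing checks
```

Under this model, reaching maxWeight with the values from canary.yaml takes ten passing checks, so at a 30-second interval a release needs at least about five minutes of analysis before it can be promoted.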

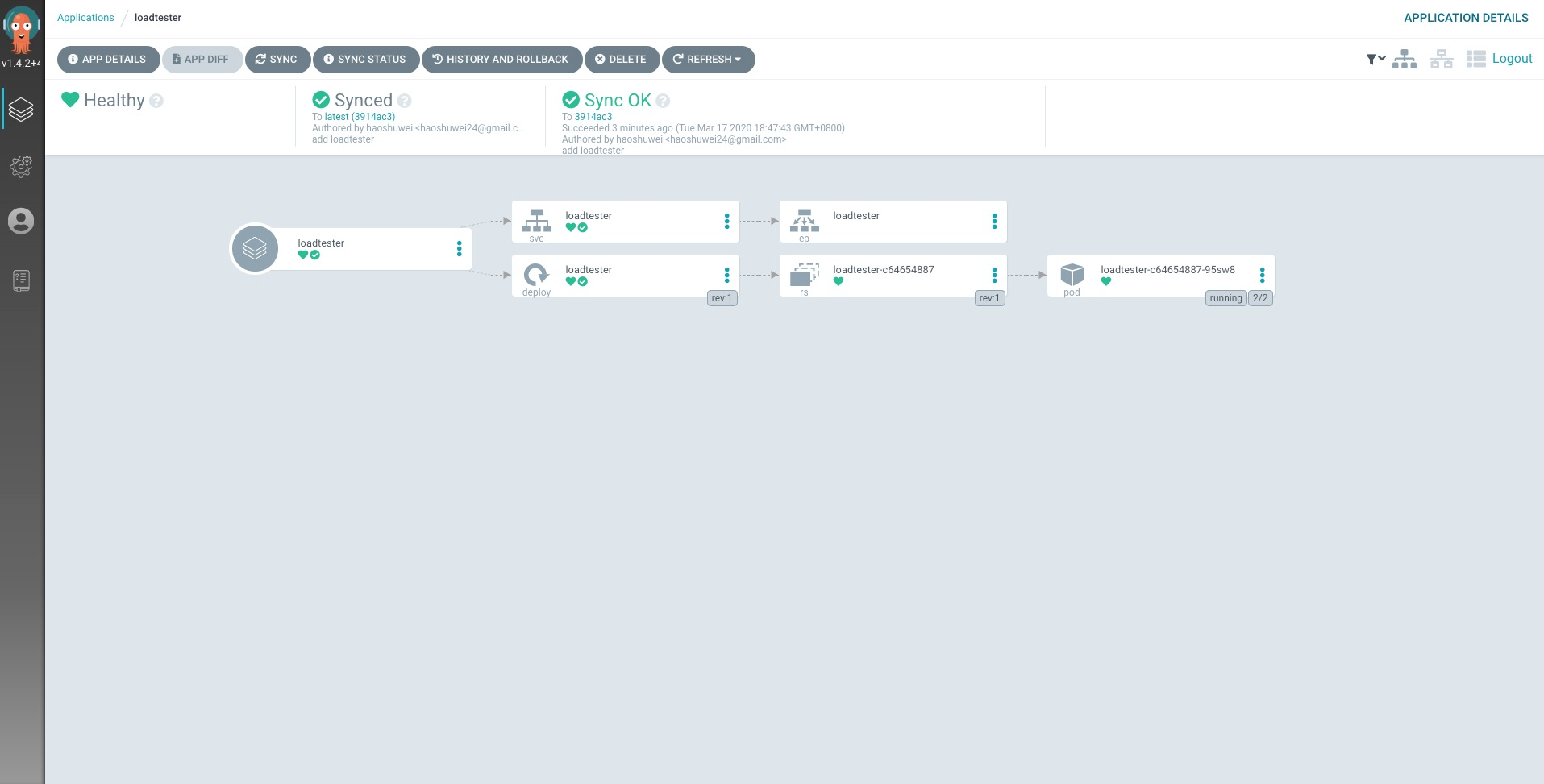

Deploy the test application loadtester to the mesh01 cluster:

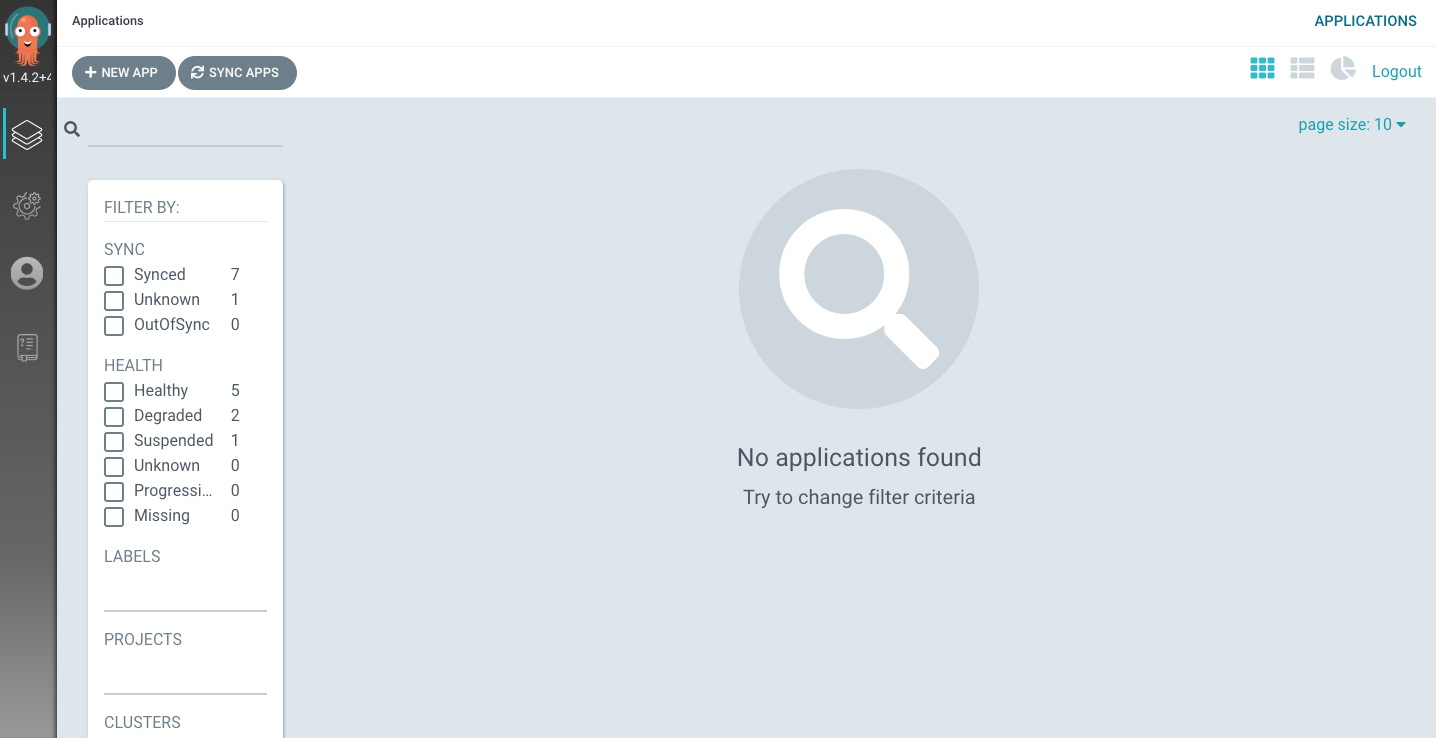

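    $ argocd app create --project default --name loadtester \
        --repo https://github.com/haoshuwei/argocd-samples.git \
        --path flagger/charts/loadtester \
        --dest-server https://xx.xxx.xxx.xx:6443 \
        --dest-namespace test \
        --revision latest \
        --sync-policy automated

Because the sync policy of both applications is automated, they are deployed to the mesh01 and mesh02 clusters automatically once they have been created. The application details can be viewed on the ArgoCD page:

podinfo details:

loadtester details:

In the ASM console, we can see the virtual service and destination rule that Flagger created dynamically: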

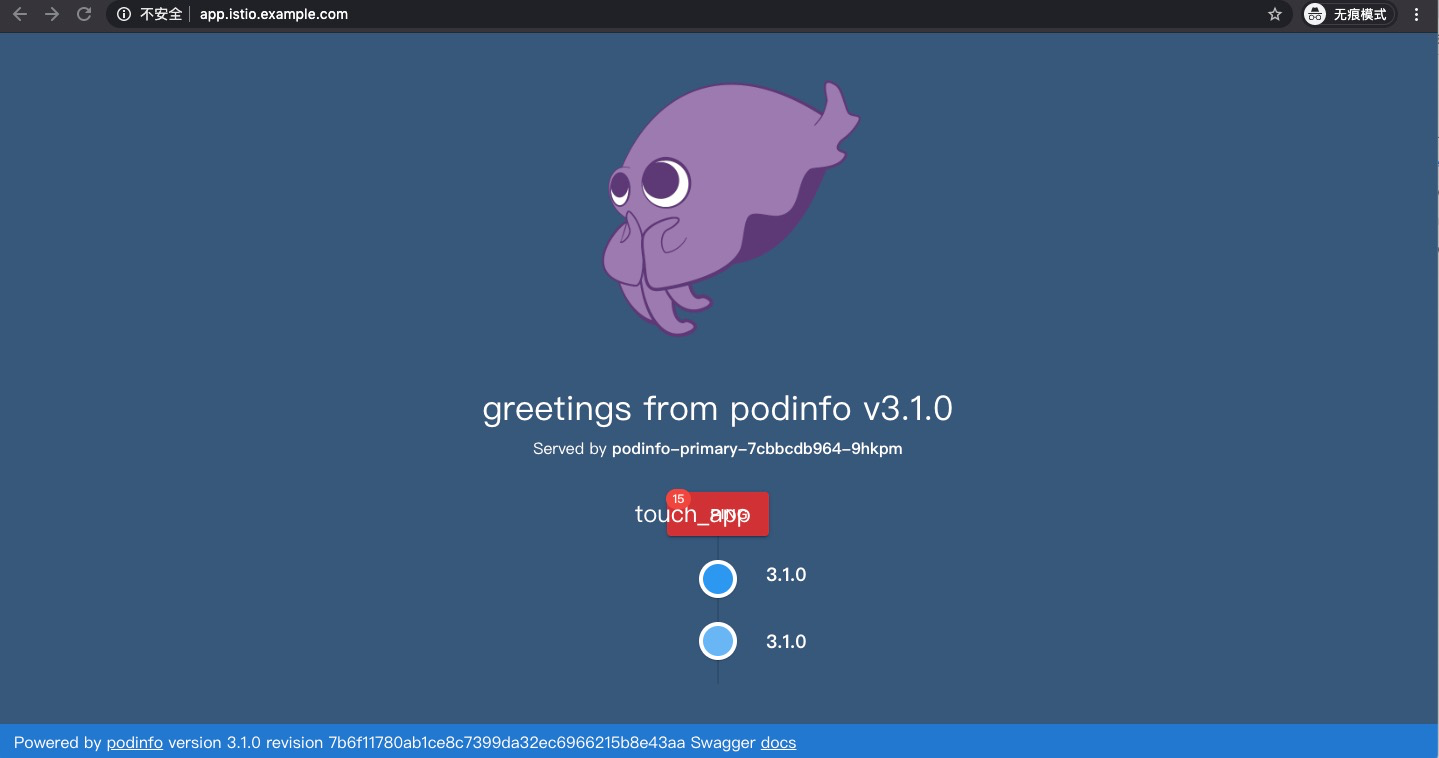

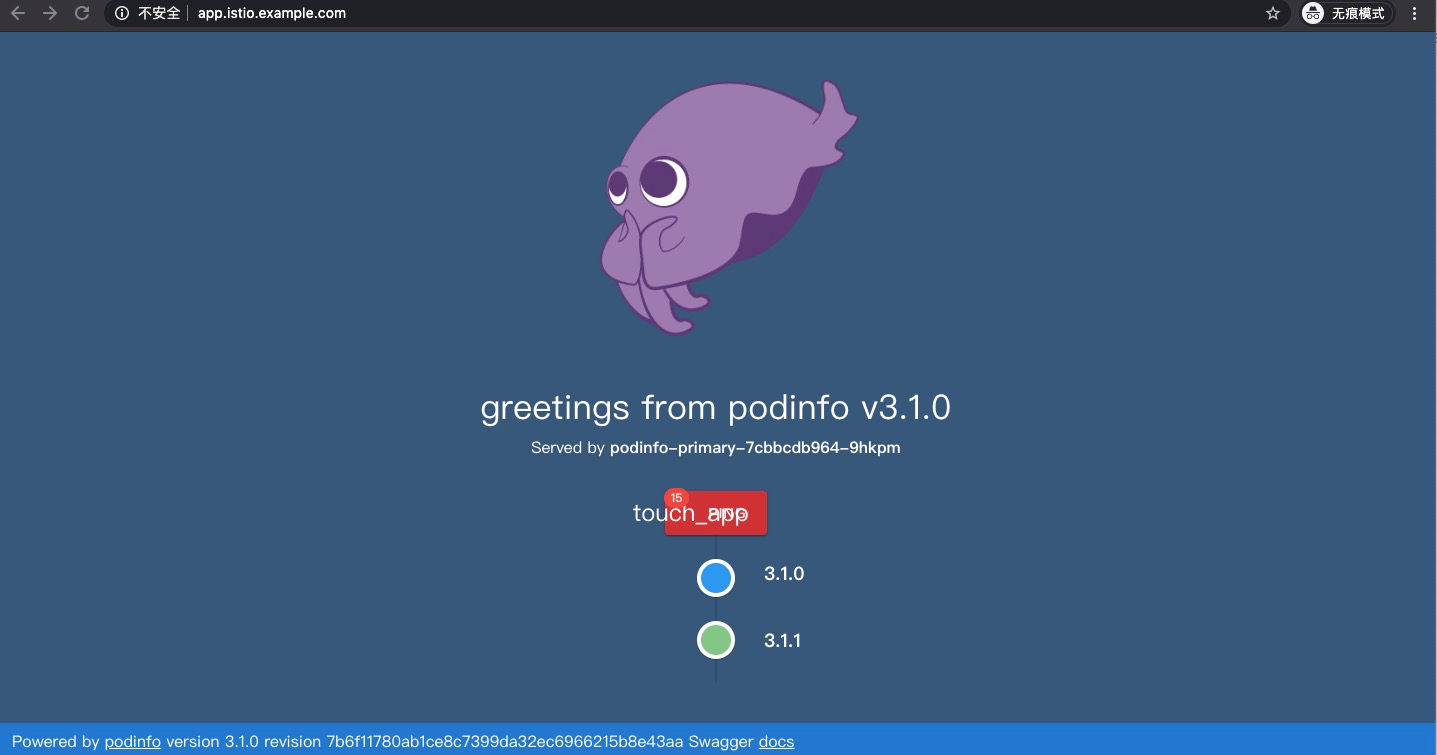

Access the service:

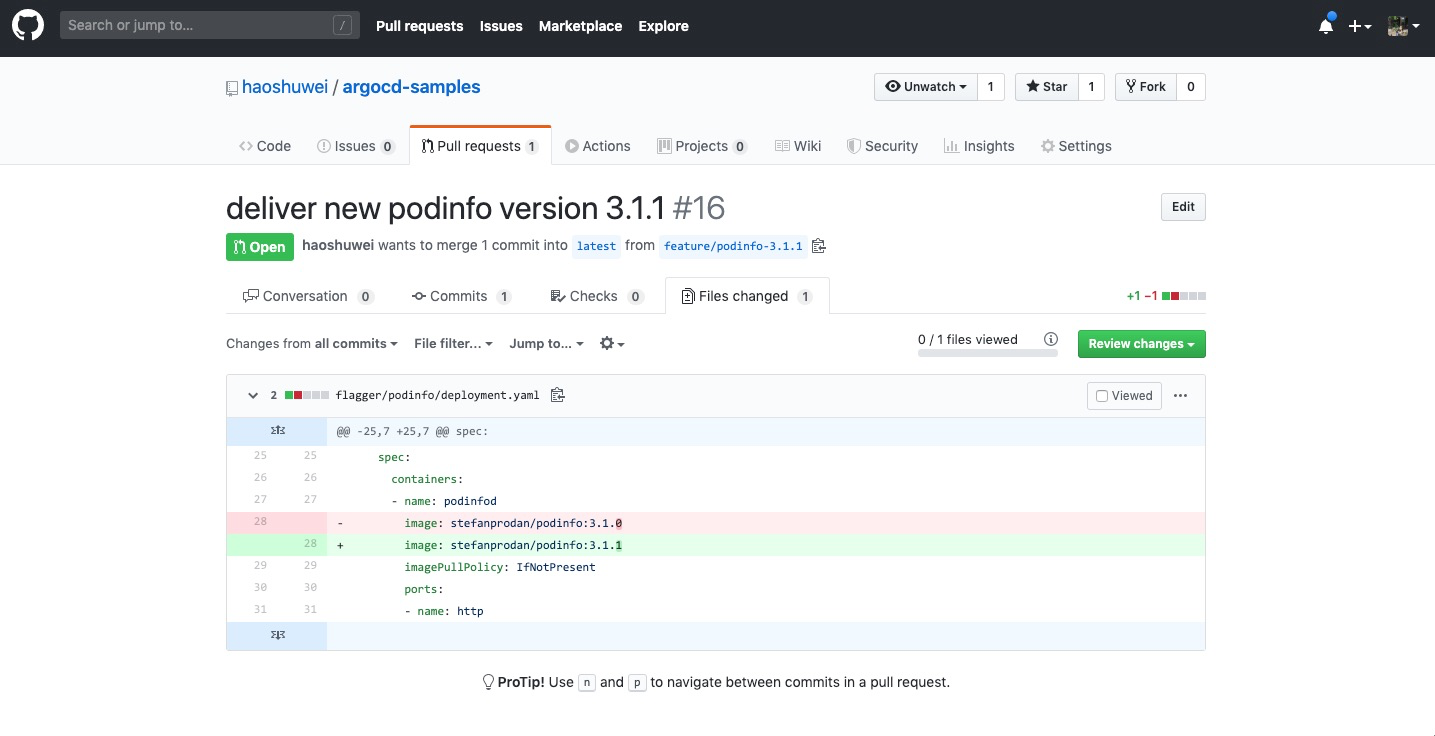

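Automated GitOps release

Create a new branch, change the container image version of the application, commit the change, and open a pull request against the latest branch:

After an administrator approves and merges the pull request, the new code lands on the latest branch and ArgoCD automatically syncs the update to the cluster. Flagger detects the new revision of podinfo and starts rolling it out progressively. The release progress can be followed with this command:

    $ watch kubectl get canaries --all-namespaces
    Every 2.0s: kubectl get canaries --all-namespaces    Tue Mar 17 19:04:20 2020
    NAMESPACE   NAME      STATUS        WEIGHT   LASTTRANSITIONTIME
    test        podinfo   Progressing   10       2020-03-17T11:04:01Z

Visiting the application shows that part of the traffic has been switched to the new version: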

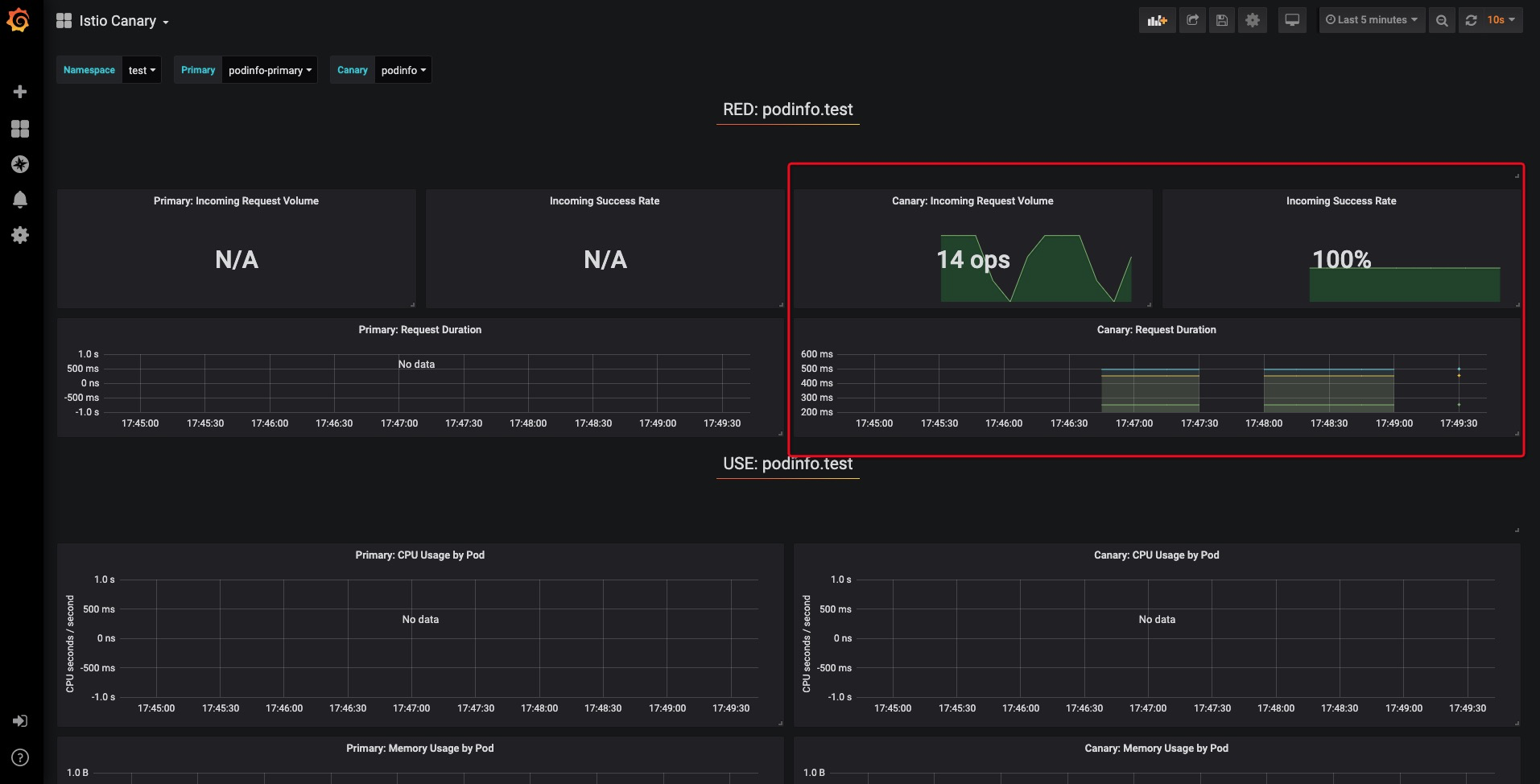
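Why only some visits reach the new version can be seen with a toy model. The sketch below assumes that weighted routing behaves like an independent random choice per request, which is a simplification of what the mesh does. `canary_share` is a hypothetical helper, and the weights are taken from the steps used in this example.

```python
import random


def canary_share(canary_weight: int, requests: int = 10_000, seed: int = 0) -> float:
    """Estimate the fraction of requests served by the canary at a given weight."""
    rng = random.Random(seed)  # seeded so that the sketch is reproducible
    hits = sum(1 for _ in range(requests) if rng.randrange(100) < canary_weight)
    return hits / requests


if __name__ == "__main__":
    for weight in (5, 10, 50):
        share = canary_share(weight)
        print(f"weight {weight}: {share:.1%} of requests hit the canary")
```

At a weight of 10, roughly one request in ten lands on the new version, so several page reloads may be needed before the new version shows up.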

同時我們也可以在grafana面板中查看到新版本測試指標情況:

整個發佈過程的messages輸出如下:

"msg":"New revision detected! Scaling up podinfo.test","canary":"podinfo.test"

"msg":"Starting canary analysis for podinfo.test","canary":"podinfo.test"

"msg":"Advance podinfo.test canary weight 5","canary":"podinfo.test"

"msg":"Advance podinfo.test canary weight 10","canary":"podinfo.test"

"msg":"Advance podinfo.test canary weight 15","canary":"podinfo.test"

"msg":"Advance podinfo.test canary weight 20","canary":"podinfo.test"

"msg":"Advance podinfo.test canary weight 25","canary":"podinfo.test"

"msg":"Advance podinfo.test canary weight 30","canary":"podinfo.test"

"msg":"Advance podinfo.test canary weight 35","canary":"podinfo.test"

"msg":"Advance podinfo.test canary weight 40","canary":"podinfo.test"

"msg":"Advance podinfo.test canary weight 45","canary":"podinfo.test"

"msg":"Advance podinfo.test canary weight 50","canary":"podinfo.test"

"msg":"Copying podinfo.test template spec to podinfo-primary.test","canary":"podinfo.test"

"msg":"Halt advancement podinfo-primary.test waiting for rollout to finish: 3 of 4 updated replicas are available","canary":"podinfo.test"

"msg":"Routing all traffic to primary","canary":"podinfo.test"

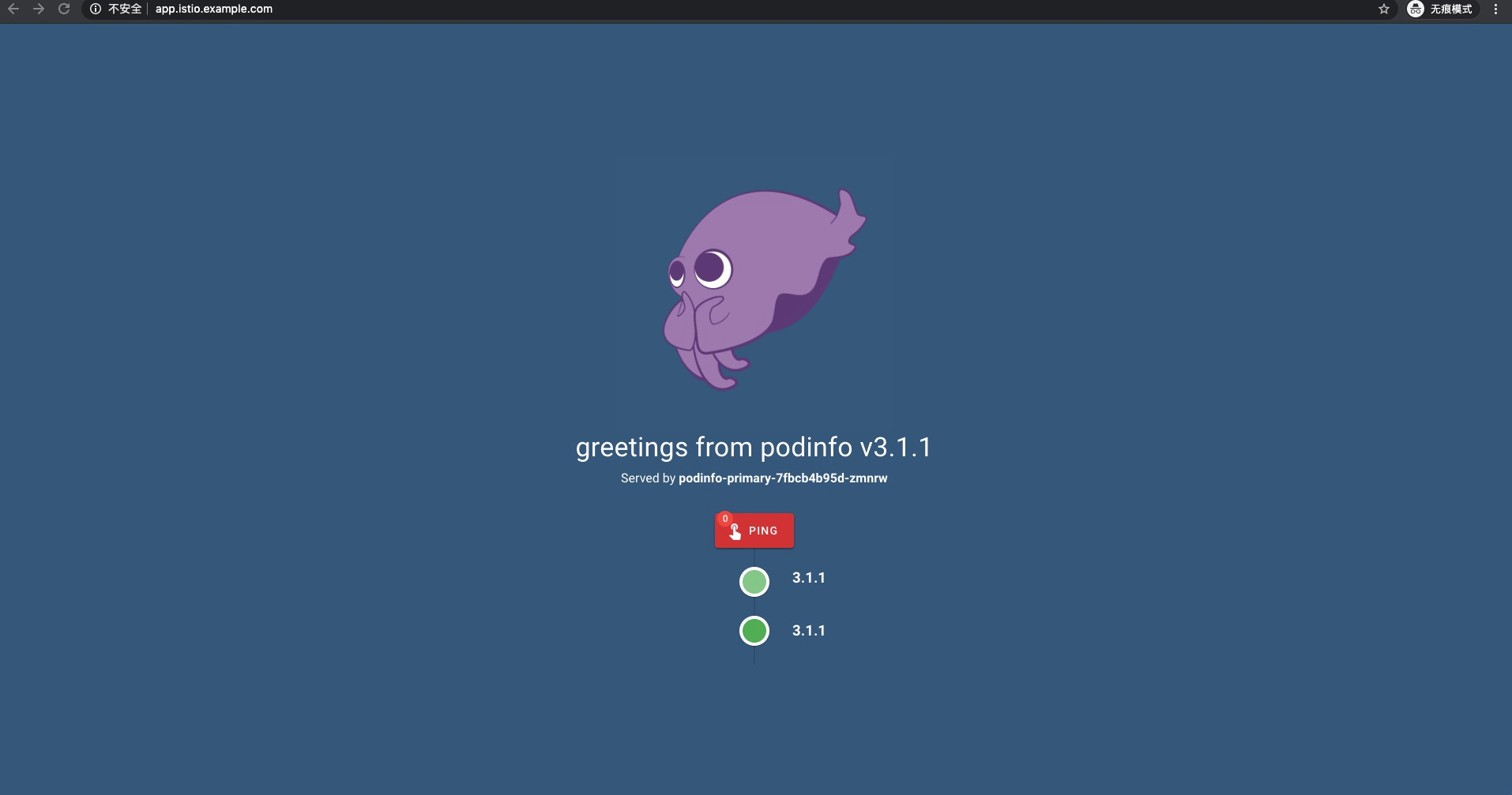

"msg":"Promotion completed! Scaling down podinfo.test","canary":"podinfo.test"應用發佈完畢後,所有流量切換到新版本上:

If the test metrics of the new version do not meet the thresholds, the application is rolled back automatically to its initial stable state:

    "msg":"New revision detected! Scaling up podinfo.test","canary":"podinfo.test"
    "msg":"Starting canary analysis for podinfo.test","canary":"podinfo.test"
    "msg":"Advance podinfo.test canary weight 10","canary":"podinfo.test"
    "msg":"Halt advancement no values found for istio metric request-success-rate probably podinfo.test is not receiving traffic","canary":"podinfo.test"
    "msg":"Halt advancement no values found for istio metric request-duration probably podinfo.test is not receiving traffic","canary":"podinfo.test"
    "msg":"Halt advancement no values found for istio metric request-duration probably podinfo.test is not receiving traffic","canary":"podinfo.test"
    "msg":"Halt advancement no values found for istio metric request-duration probably podinfo.test is not receiving traffic","canary":"podinfo.test"
    "msg":"Halt advancement no values found for istio metric request-duration probably podinfo.test is not receiving traffic","canary":"podinfo.test"
    "msg":"Synced test/podinfo"
    "msg":"Rolling back podinfo.test failed checks threshold reached 5","canary":"podinfo.test"
    "msg":"Canary failed! Scaling down podinfo.test","canary":"podinfo.test"

References

https://medium.com/google-cloud/automated-canary-deployments-with-flagger-and-istio-ac747827f9d1

https://docs.flagger.app/

https://docs.flagger.app/dev/upgrade-guide#istio-telemetry-v2

https://github.com/weaveworks/flagger

https://help.aliyun.com/document_detail/149550.html