Trying Out Kubernetes 1.16 on Alibaba Cloud Container Service: A New Way to Use GPUs (v4)

Introduction

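TensorFlow is the most popular open-source framework for deep learning and machine learning. It was originally developed by a Google research team for machine-learning research on deep neural networks, and it has been widely adopted since it was open-sourced in 2015. TensorBoard in particular is a powerful tool that makes data scientists' work much more effective.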

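Jupyter Notebook is a powerful data-analysis tool. It helps people develop machine-learning code quickly and share it, which makes it a favorite of data-science teams for running experiments and collaborating, and a good starting point for newcomers to machine learning.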

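Developing TensorFlow code in Jupyter is the first choice of many data scientists. However, building such an environment from scratch, configuring GPU access, and keeping up with the latest TensorFlow versions is both complicated and a waste of a data scientist's effort.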

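On a Kubernetes cluster you can quickly deploy a complete Jupyter Notebook environment for model development. The only problem with this approach is that each GPU is allocated exclusively to one Pod, which wastes a lot of GPU capacity. Data scientists experimenting in a notebook usually need little GPU memory, so letting several people share one GPU would lower the cost of model development.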

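To address this, the Alibaba Cloud Container Service team has released a GPU-sharing solution that greatly improves GPU utilization in model-development and inference scenarios while still guaranteeing isolation of GPU resources.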

The exclusive-GPU approach

Add a new GPU node to the cluster

- Create a Container Service cluster.

- Add a GPU node as a worker.

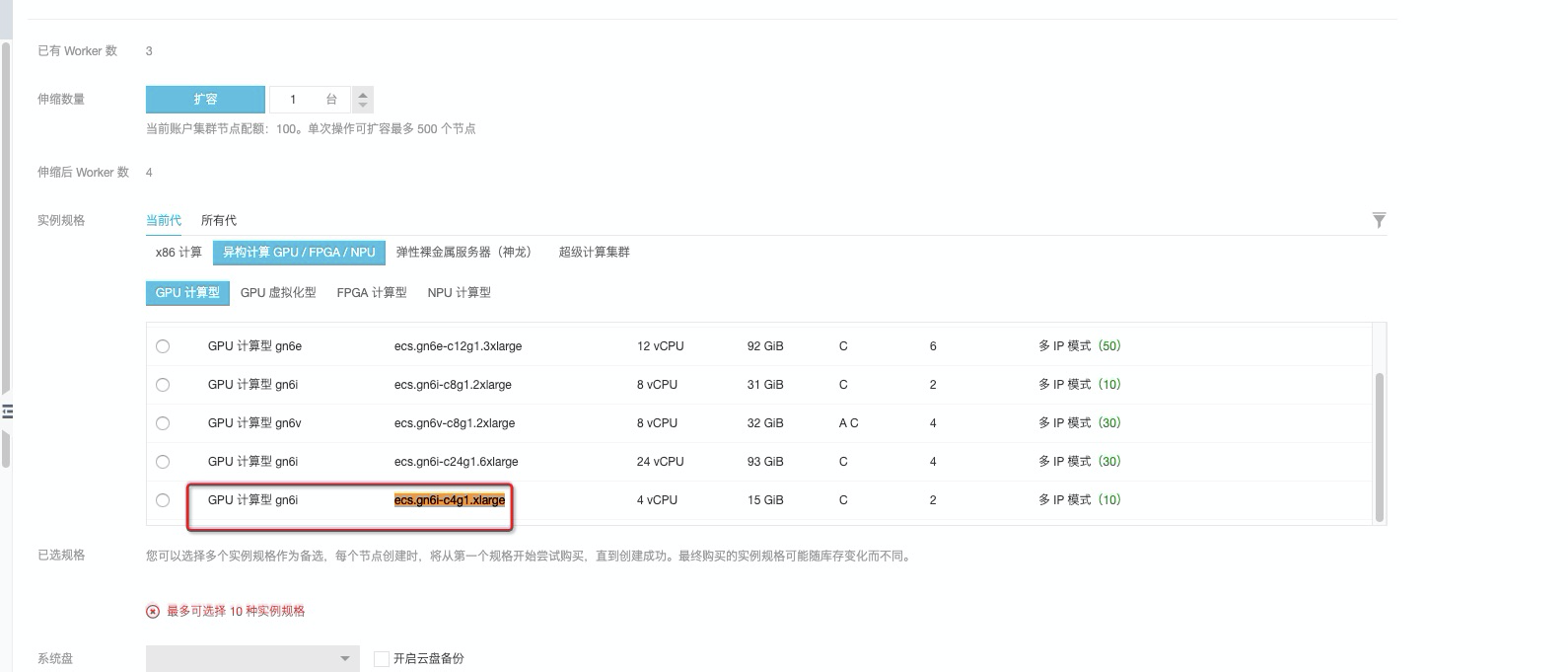

In this example we choose the GPU instance type ecs.gn6i-c4g1.xlarge.

After the node is added, it appears in the node list as cn-zhangjiakou.192.168.3.189:

```
jumper(⎈ |zjk-gpu:default)➜ ~ kubectl get node -L cgpu,workload_type
NAME                           STATUS   ROLES    AGE     VERSION            CGPU   WORKLOAD_TYPE
cn-zhangjiakou.192.168.0.138   Ready    master   11d     v1.16.6-aliyun.1
cn-zhangjiakou.192.168.1.112   Ready    master   11d     v1.16.6-aliyun.1
cn-zhangjiakou.192.168.1.113   Ready    <none>   11d     v1.16.6-aliyun.1
cn-zhangjiakou.192.168.3.115   Ready    master   11d     v1.16.6-aliyun.1
cn-zhangjiakou.192.168.3.189   Ready    <none>   5m52s   v1.16.6-aliyun.1
```

Deploy the application

Deploy the application with kubectl apply -f gpu_deployment.yaml. The contents of gpu_deployment.yaml are:

```yaml
---
# Define the tensorflow deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tf-notebook-gpu
  labels:
    app: tf-notebook-gpu
spec:
  replicas: 2
  selector: # define how the deployment finds the pods it manages
    matchLabels:
      app: tf-notebook-gpu
  template: # define the pod specification
    metadata:
      labels:
        app: tf-notebook-gpu
    spec:
      containers:
      - name: tf-notebook
        image: tensorflow/tensorflow:1.4.1-gpu-py3
        resources:
          limits:
            nvidia.com/gpu: 1
          requests:
            nvidia.com/gpu: 1
        ports:
        - containerPort: 8888
        env:
        - name: PASSWORD
          value: mypassw0rd
---
# Define the tensorflow service
apiVersion: v1
kind: Service
metadata:
  name: tf-notebook-gpu
spec:
  ports:
  - port: 80
    targetPort: 8888
    name: jupyter
  selector:
    app: tf-notebook-gpu
  type: LoadBalancer
```

Because there is only one GPU node but the YAML above requests two Pods, the Pods are scheduled as shown below.

The second Pod stays Pending because no node has a free GPU to schedule it on; in other words, only one Pod can exclusively occupy the node's GPU.

```
jumper(⎈ |zjk-gpu:default)➜ ~ kubectl get pod
NAME                               READY   STATUS    RESTARTS   AGE
tf-notebook-2-7b4d68d8f7-mb852     1/1     Running   0          15h
tf-notebook-3-86c48d4c7d-flz7m     1/1     Running   0          15h
tf-notebook-7cf4575d78-sxmfl       1/1     Running   0          23h
tf-notebook-gpu-695cb6cf89-dsjmv   1/1     Running   0          6s
tf-notebook-gpu-695cb6cf89-mwm98   0/1     Pending   0          6s
jumper(⎈ |zjk-gpu:default)➜ ~ kubectl describe pod tf-notebook-gpu-695cb6cf89-mwm98
Name:           tf-notebook-gpu-695cb6cf89-mwm98
Namespace:      default
Priority:       0
Node:           <none>
Labels:         app=tf-notebook-gpu
                pod-template-hash=695cb6cf89
Annotations:    kubernetes.io/psp: ack.privileged
Status:         Pending
IP:
IPs:            <none>
Controlled By:  ReplicaSet/tf-notebook-gpu-695cb6cf89
Containers:
  tf-notebook:
    Image:      tensorflow/tensorflow:1.4.1-gpu-py3
    Port:       8888/TCP
    Host Port:  0/TCP
    Limits:
      nvidia.com/gpu:  1
    Requests:
      nvidia.com/gpu:  1
    Environment:
      PASSWORD:  mypassw0rd
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-wpwn8 (ro)
Conditions:
  Type           Status
  PodScheduled   False
Volumes:
  default-token-wpwn8:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-wpwn8
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type     Reason            Age        From               Message
  ----     ------            ----       ----               -------
  Warning  FailedScheduling  <unknown>  default-scheduler  0/6 nodes are available: 6 Insufficient nvidia.com/gpu.
  Warning  FailedScheduling  <unknown>  default-scheduler  0/6 nodes are available: 6 Insufficient nvidia.com/gpu.
```

A real program

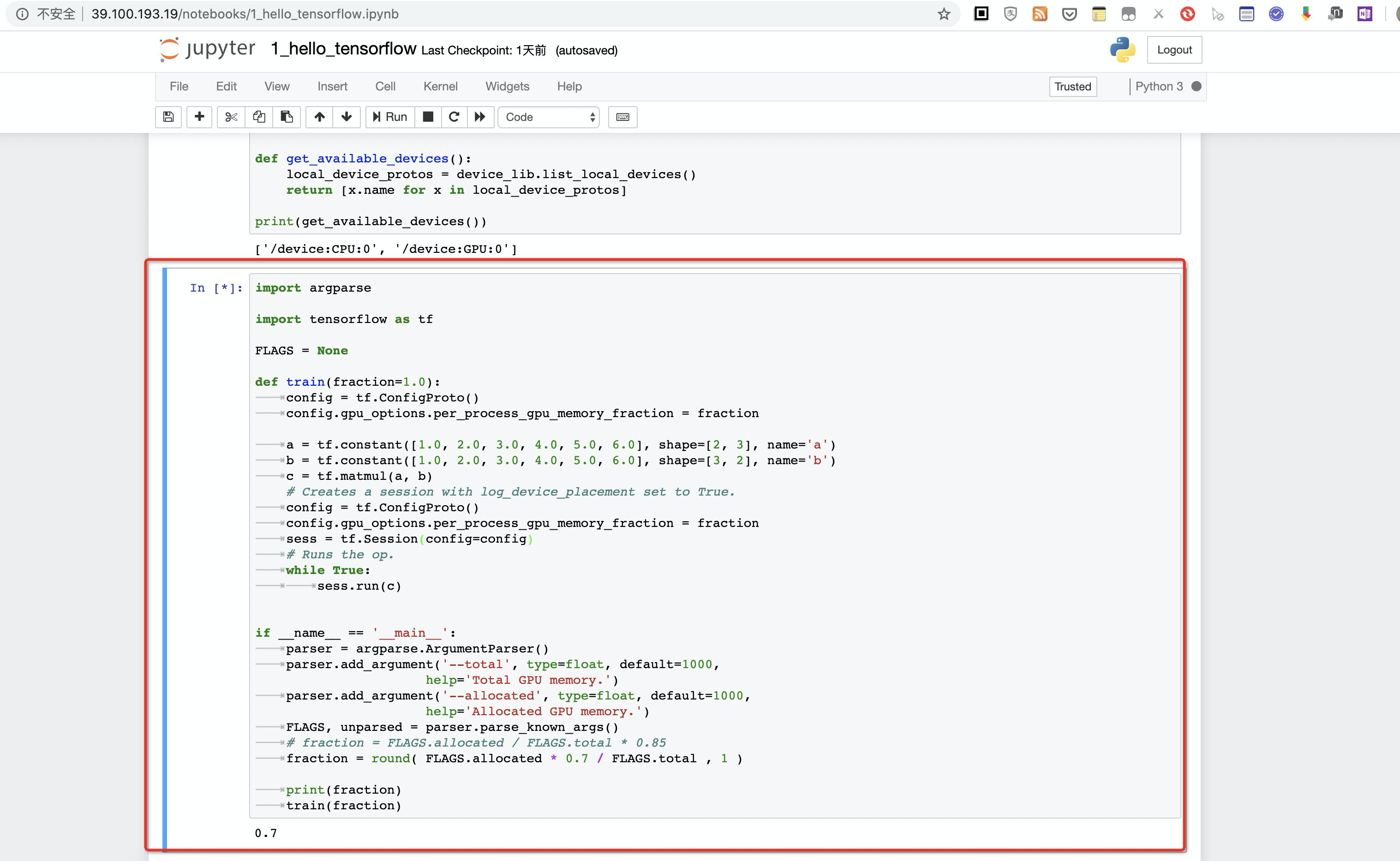

在jupyter裡執行下面的程序

import argparse

import tensorflow as tf

FLAGS = None

def train(fraction=1.0):

config = tf.ConfigProto()

config.gpu_options.per_process_gpu_memory_fraction = fraction

a = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[2, 3], name='a')

b = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[3, 2], name='b')

c = tf.matmul(a, b)

# Creates a session with log_device_placement set to True.

config = tf.ConfigProto()

config.gpu_options.per_process_gpu_memory_fraction = fraction

sess = tf.Session(config=config)

# Runs the op.

while True:

sess.run(c)

if __name__ == '__main__':

parser = argparse.ArgumentParser()

parser.add_argument('--total', type=float, default=1000,

help='Total GPU memory.')

parser.add_argument('--allocated', type=float, default=1000,

help='Allocated GPU memory.')

FLAGS, unparsed = parser.parse_known_args()

# fraction = FLAGS.allocated / FLAGS.total * 0.85

fraction = round( FLAGS.allocated * 0.7 / FLAGS.total , 1 )

print(fraction) # fraction 默認值為0.7,該程序最多使用總資源的70%

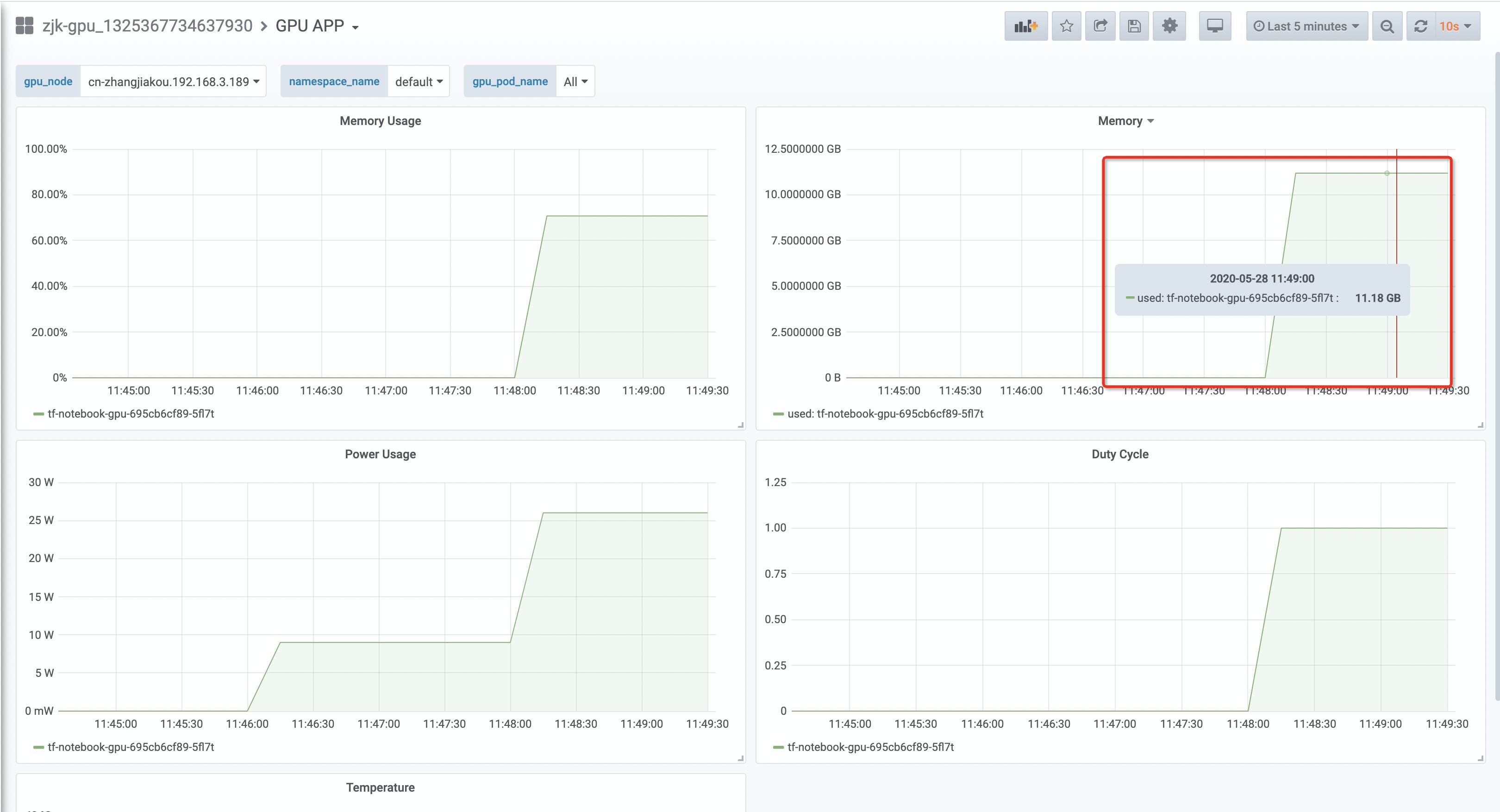

train(fraction)通過託管版本Prometheus可以看到,在運行時其使用了整機資源的70%,
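With the default arguments, the program above computes round(1000 * 0.7 / 1000, 1) = 0.7 and passes it to per_process_gpu_memory_fraction, which caps the share of the GPU's memory that TensorFlow allocates for the process. The sketch below repeats only that arithmetic, without TensorFlow, to estimate the resulting cap. The helper names are hypothetical, the 15079 MiB total is the Tesla T4 figure from the nvidia-smi output later in this article, and TensorFlow's real footprint can differ somewhat from this estimate.

```python
def memory_fraction(allocated, total, headroom=0.7):
    """Same formula as the program above: round(allocated * 0.7 / total, 1)."""
    return round(allocated * headroom / total, 1)


def memory_cap_mib(fraction, visible_mib):
    """Approximate GPU memory cap (MiB) implied by per_process_gpu_memory_fraction."""
    return fraction * visible_mib


fraction = memory_fraction(1000, 1000)  # the program's default --allocated/--total
print(fraction)  # 0.7
# Rough cap on a Tesla T4 that reports 15079 MiB (figure taken from this article).
print(round(memory_cap_mib(fraction, 15079)))
```

Note that the fraction is relative to the memory the process can see, which is why the same 0.7 gives a much smaller cap once each Pod only sees its shared slice of the GPU.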

Problems with the exclusive-GPU approach

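In short, the problem with exclusive GPU scheduling is that in scenarios with light GPU usage, such as inference and teaching, it cannot pack several Pods onto one GPU so that they share it.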
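The Pending Pod earlier follows from how nvidia.com/gpu is scheduled: it is an extended resource counted in whole GPUs, and a Pod is placed on a node only if the node still has enough unallocated units for its request. The sketch below is a deliberately simplified first-fit model of that rule, not kube-scheduler's actual code; the function name and Pod names are hypothetical, and the single node with one GPU mirrors this example.

```python
def schedule_whole_gpus(node_gpus, pod_requests):
    """Simplified first-fit model of scheduling an integer resource such as nvidia.com/gpu.

    node_gpus: dict mapping node name -> number of whole GPUs on that node.
    pod_requests: list of (pod name, GPUs requested) in submission order.
    Returns a dict mapping pod name -> node name, or "Pending" if nothing fits.
    """
    free = dict(node_gpus)  # remaining unallocated GPUs per node
    placement = {}
    for pod, request in pod_requests:
        for node, available in free.items():
            if available >= request:
                free[node] = available - request
                placement[pod] = node
                break
        else:
            placement[pod] = "Pending"  # no node has enough free GPUs
    return placement


# Hypothetical data mirroring this example: one GPU node, two replicas each requesting 1 GPU.
result = schedule_whole_gpus(
    {"cn-zhangjiakou.192.168.3.189": 1},
    [("tf-notebook-gpu-a", 1), ("tf-notebook-gpu-b", 1)],
)
print(result)
```

However little memory the first notebook actually uses, it holds the whole unit, so the second replica has nowhere to go.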

To solve this problem we introduce GPU sharing, which makes better use of GPU resources by providing denser deployment, higher GPU utilization, and full isolation.

The GPU-sharing approach

Environment preparation

Prerequisites

| Component | Supported version |
|---|---|
| Kubernetes | 1.16.6; for dedicated clusters, the master nodes must be in the customer's VPC |
| Helm | 3.0 or later |
| NVIDIA driver | 418.87.01 or later |
| Docker | 19.03.5 |
| Operating system | CentOS 7.6, CentOS 7.7, Ubuntu 16.04, and Ubuntu 18.04 |
| Supported GPUs | Tesla P4, Tesla P100, Tesla T4, and Tesla V100 (16 GB) |
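The driver requirement is a minimum version, and a dotted version string such as 418.87.01 should be compared numerically, field by field, rather than as plain text. The helper below is a hypothetical sketch of that check; how you obtain the installed version (for example from the header line printed by nvidia-smi) is left to you.

```python
MIN_DRIVER = "418.87.01"  # minimum NVIDIA driver version from the table above


def parse_version(version):
    """Turn '418.87.01' into a tuple of ints: (418, 87, 1)."""
    return tuple(int(part) for part in version.strip().split("."))


def driver_supported(installed, minimum=MIN_DRIVER):
    """True if the installed driver is at least the minimum version."""
    return parse_version(installed) >= parse_version(minimum)


print(driver_supported("418.87.01"))  # True: the driver shown by nvidia-smi in this article
print(driver_supported("410.129"))    # False: older than the minimum
```

Python compares tuples element by element, so (418, 87, 1) versus (410, 129) is decided by the first field alone.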

Create a cluster

Add a GPU node

The GPU node used in this article is of type ecs.gn6i-c4g1.xlarge.

Mark the node as a GPU-sharing node by labeling it

- 登錄容器服務管理控制檯。

- 在控制檯左側導航欄中,選擇集群 > 節點

- 在節點列表頁面,選擇目標集群並單擊頁面右上角標籤管理。

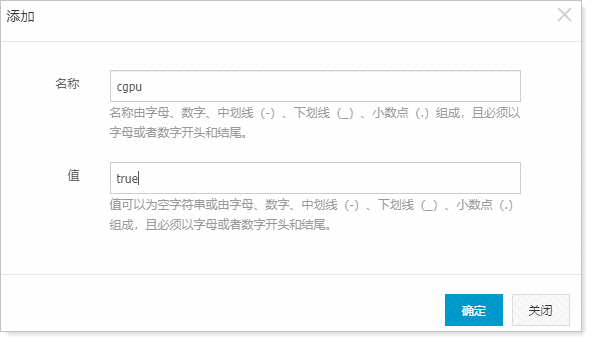

- 在標籤管理頁面,批量選擇節點,然後單擊添加標籤。

- 在彈出的添加對話框中,填寫標籤名稱和值。

注意 請確保名稱設置為cgpu,值設置為true。

- 單擊確定。

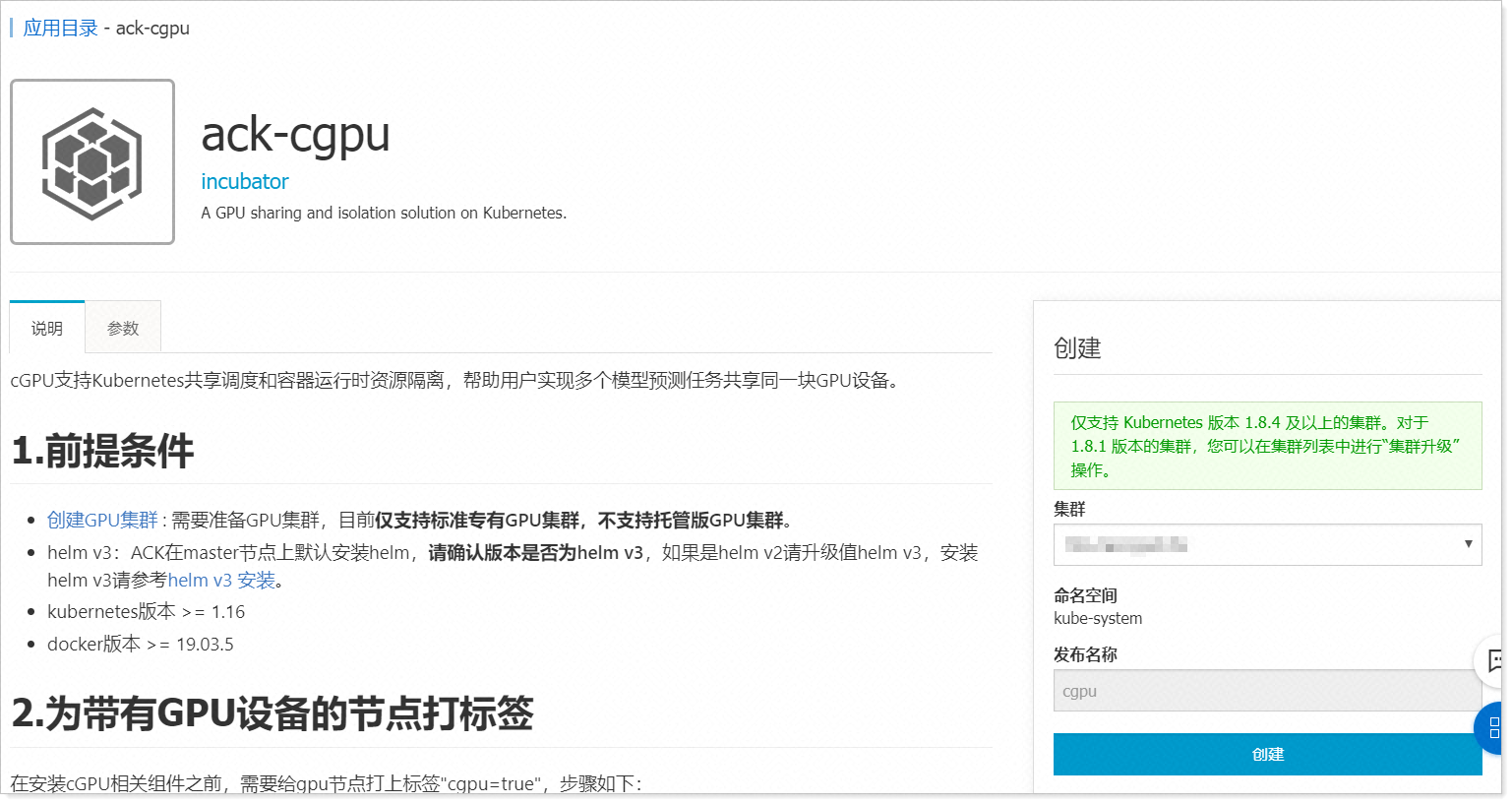
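The exact label matters because the cGPU components installed next run as DaemonSets whose node selector is cgpu=true (see the NODE SELECTOR column in the installation output further down). A nodeSelector matches a node only when every key/value pair in the selector appears among the node's labels, compared as strings. The sketch below models just that rule with hypothetical node data; the same label can also be applied from the command line with kubectl label nodes <node-name> cgpu=true.

```python
def matches_node_selector(node_labels, selector):
    """A nodeSelector matches when every key/value pair appears in the node's labels."""
    return all(node_labels.get(key) == value for key, value in selector.items())


# Hypothetical label sets; label values are strings, so "true" rather than True.
nodes = {
    "cn-zhangjiakou.192.168.1.113": {"kubernetes.io/os": "linux"},
    "cn-zhangjiakou.192.168.3.184": {"kubernetes.io/os": "linux", "cgpu": "true"},
}
selector = {"cgpu": "true"}

shared_nodes = [name for name, labels in nodes.items() if matches_node_selector(labels, selector)]
print(shared_nodes)  # ['cn-zhangjiakou.192.168.3.184']
```

A typo such as CGPU or a value of True entered as something other than the string true would leave the node unmatched, and the sharing components would never be scheduled there.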

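Install the cGPU component in the cluster

- Log on to the Container Service console.

- In the left-side navigation pane of the console, choose Marketplace > App Catalog.

- On the App Catalog page, find and click ack-cgpu.

- In the Create panel on the right side of the App Catalog - ack-cgpu page, select the target cluster and click Create. You do not need to set the namespace or release name; the system fills in default values.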
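Once the chart is created, each DaemonSet it deploys should report READY equal to DESIRED on the labeled nodes, which is what the verification output shown next lets you read off by eye. If you want to script that check, the sketch below parses whitespace-separated daemonset rows in the same column order (NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE) and flags mismatches. The function name is hypothetical and the column parsing is fragile, so treat it as a sketch rather than a tool.

```python
def unready_daemonsets(output):
    """Return names of DaemonSets whose READY count differs from DESIRED."""
    problems = []
    for line in output.splitlines():
        fields = line.split()
        if not fields or not fields[0].startswith("daemonset.apps/"):
            continue  # skip headers and other resource kinds
        name, desired, ready = fields[0], int(fields[1]), int(fields[3])
        if ready != desired:
            problems.append(name)
    return problems


# Rows copied from the installation output in this article.
sample = """\
NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE
daemonset.apps/cgpu-installer 4 4 4 4 4 cgpu=true 39s
daemonset.apps/device-plugin-recover-ds 0 0 0 0 0 cgpu=false 39s
daemonset.apps/gpushare-device-plugin-ds 4 4 4 4 4 cgpu=true 39s
"""
print(unready_daemonsets(sample))  # [] means every DaemonSet is fully ready
```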

You can run helm get manifest cgpu -n kube-system | kubectl get -f - to check whether the cGPU component was installed successfully. Output like the following indicates a successful installation:

```
# helm get manifest cgpu -n kube-system | kubectl get -f -
NAME                                    SECRETS   AGE
serviceaccount/gpushare-device-plugin   1         39s
serviceaccount/gpushare-schd-extender   1         39s

NAME                                                           AGE
clusterrole.rbac.authorization.k8s.io/gpushare-device-plugin   39s
clusterrole.rbac.authorization.k8s.io/gpushare-schd-extender   39s

NAME                                                                  AGE
clusterrolebinding.rbac.authorization.k8s.io/gpushare-device-plugin   39s
clusterrolebinding.rbac.authorization.k8s.io/gpushare-schd-extender   39s

NAME                             TYPE       CLUSTER-IP    EXTERNAL-IP   PORT(S)           AGE
service/gpushare-schd-extender   NodePort   10.6.13.125   <none>        12345:32766/TCP   39s

NAME                                       DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/cgpu-installer              4         4         4       4            4           cgpu=true       39s
daemonset.apps/device-plugin-evict-ds      4         4         4       4            4           cgpu=true       39s
daemonset.apps/device-plugin-recover-ds    0         0         0       0            0           cgpu=false      39s
daemonset.apps/gpushare-device-plugin-ds   4         4         4       4            4           cgpu=true       39s

NAME                                     READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/gpushare-schd-extender   1/1     1            1           38s

NAME                           COMPLETIONS   DURATION   AGE
job.batch/gpushare-installer   3/1 of 3      3s         38s
```

Install arena and check resources

Install arena

On Linux:

```shell
wget http://kubeflow.oss-cn-beijing.aliyuncs.com/arena-installer-0.4.0-829b0e9-linux-amd64.tar.gz
tar -xzvf arena-installer-0.4.0-829b0e9-linux-amd64.tar.gz
sh ./arena-installer/install.sh
```

On macOS:

```shell
wget http://kubeflow.oss-cn-beijing.aliyuncs.com/arena-installer-0.4.0-829b0e9-darwin-amd64.tar.gz
tar -xzvf arena-installer-0.4.0-829b0e9-darwin-amd64.tar.gz
sh ./arena-installer/install.sh
```

Check resources

```
jumper(⎈ |zjk-gpu:default)➜ ~ arena top node
NAME                          IPADDRESS      ROLE    STATUS  GPU(Total)  GPU(Allocated)  GPU(Shareable)
cn-zhangjiakou.192.168.0.138  192.168.0.138  master  ready   0           0               No
cn-zhangjiakou.192.168.1.112  192.168.1.112  master  ready   0           0               No
cn-zhangjiakou.192.168.1.113  192.168.1.113  <none>  ready   0           0               No
cn-zhangjiakou.192.168.3.115  192.168.3.115  master  ready   0           0               No
cn-zhangjiakou.192.168.3.184  192.168.3.184  <none>  ready   1           0               Yes
------------------------------------------------------------------------------------------------
Allocated/Total GPUs In Cluster:
0/1 (0%)
jumper(⎈ |zjk-gpu:default)➜ ~ arena top node -s
NAME                          IPADDRESS      GPU0(Allocated/Total)
cn-zhangjiakou.192.168.3.184  192.168.3.184  0/14
---------------------------------------------------------------------
Allocated/Total GPU Memory In GPUShare Node:
0/14 (GiB) (0%)
```

As shown above:

Node cn-zhangjiakou.192.168.3.184 has 1 GPU and is marked GPU(Shareable), meaning it carries the label cgpu=true; that GPU offers 14 GiB of GPU memory for sharing.
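With GPU sharing, the unit being scheduled becomes GPU memory rather than whole GPUs. A simplified way to see what 14 GiB allows: Pods requesting aliyun.com/gpu-mem: 4 can be admitted until the remaining memory drops below 4 GiB. The sketch below is a hypothetical single-GPU model for intuition only, not the gpushare scheduler extender's actual algorithm, and the Pod names are made up.

```python
def place_on_shared_gpu(total_gib, requests):
    """Simplified model: admit each request while enough unallocated GPU memory remains.

    total_gib: GPU memory units reported for the GPU (14 in this article).
    requests: list of (pod name, GiB requested) in submission order.
    Returns (placed pod names, pending pod names, allocated GiB).
    """
    allocated = 0
    placed, pending = [], []
    for pod, gib in requests:
        if allocated + gib <= total_gib:
            allocated += gib
            placed.append(pod)
        else:
            pending.append(pod)  # would exceed the GPU's memory
    return placed, pending, allocated


pods = [(f"tf-notebook-{i}", 4) for i in range(1, 5)]  # four hypothetical 4 GiB notebooks
placed, pending, allocated = place_on_shared_gpu(14, pods)
print(placed)     # ['tf-notebook-1', 'tf-notebook-2', 'tf-notebook-3']
print(pending)    # ['tf-notebook-4']
print(allocated)  # 12
```

Three such notebooks fit on the one GPU, which matches the 12/14 allocation reached later in this article.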

Run a TensorFlow GPU experiment environment

Save the following file as mem_deployment.yaml and deploy it with kubectl apply -f mem_deployment.yaml:

```yaml
---
# Define the tensorflow deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tf-notebook
  labels:
    app: tf-notebook
spec:
  replicas: 1
  selector: # define how the deployment finds the pods it manages
    matchLabels:
      app: tf-notebook
  template: # define the pod specification
    metadata:
      labels:
        app: tf-notebook
    spec:
      containers:
      - name: tf-notebook
        image: tensorflow/tensorflow:1.4.1-gpu-py3
        resources:
          limits:
            aliyun.com/gpu-mem: 4
          requests:
            aliyun.com/gpu-mem: 4
        ports:
        - containerPort: 8888
        env:
        - name: PASSWORD
          value: mypassw0rd
---
# Define the tensorflow service
apiVersion: v1
kind: Service
metadata:
  name: tf-notebook
spec:
  ports:
  - port: 80
    targetPort: 8888
    name: jupyter
  selector:
    app: tf-notebook
  type: LoadBalancer
```

```
jumper(⎈ |zjk-gpu:default)➜ ~ kubectl apply -f mem_deployment.yaml
deployment.apps/tf-notebook created
service/tf-notebook created
jumper(⎈ |zjk-gpu:default)➜ ~ kubectl get svc tf-notebook
NAME          TYPE           CLUSTER-IP    EXTERNAL-IP     PORT(S)        AGE
tf-notebook   LoadBalancer   172.21.2.50   39.100.193.19   80:32285/TCP   78m
```

Open http://${EXTERNAL-IP}/ in a browser to access the notebook.

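Deployment configuration:

- aliyun.com/gpu-mem specifies the amount of GPU memory, in GiB, that the Pod requests and is limited to (in the exclusive-GPU example, nvidia.com/gpu specified the number of NVIDIA GPUs instead).

- type=LoadBalancer exposes the service through an Alibaba Cloud load balancer.

- The PASSWORD environment variable sets the password for accessing Jupyter. Change it as needed; the value in this example is mypassw0rd.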

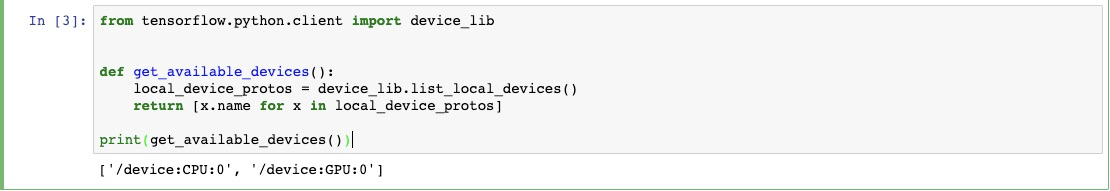

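To verify that this Jupyter instance can use the GPU, run the following program. It lists all devices available to TensorFlow.

```python
from tensorflow.python.client import device_lib


def get_available_devices():
    # Return the names of all devices TensorFlow can see (CPU and GPU).
    local_device_protos = device_lib.list_local_devices()
    return [x.name for x in local_device_protos]


print(get_available_devices())
```

The output below shows that the available GPU device is GPU:0: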

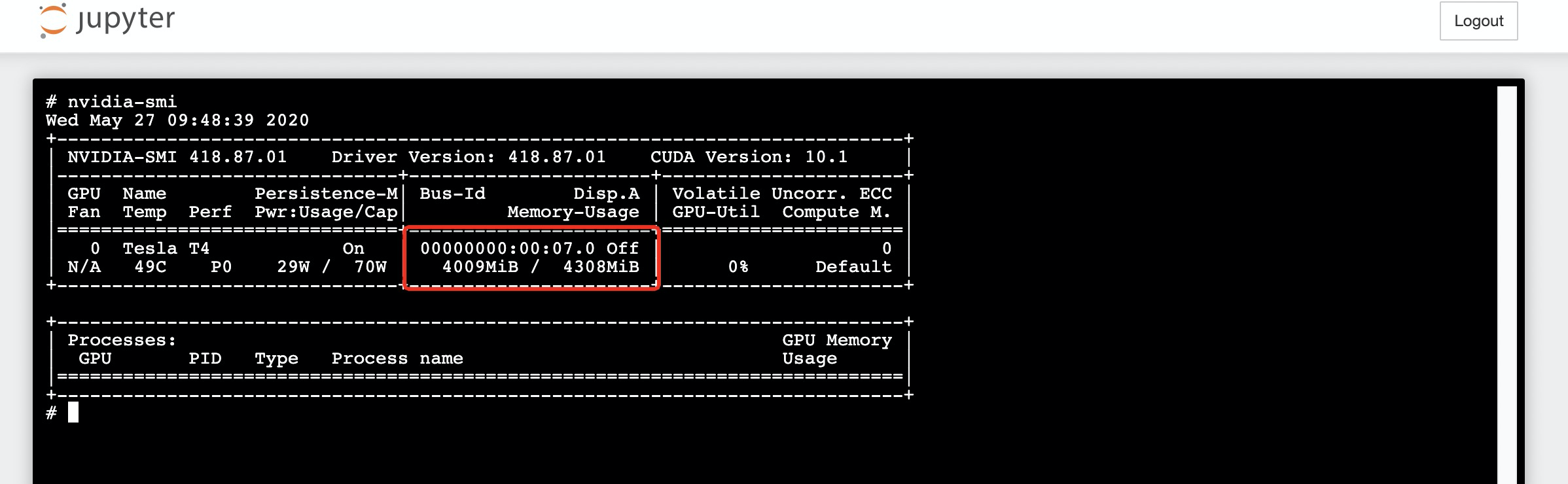
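If you prefer an explicit check to reading the printed list, you can filter the device names for GPU entries. The helper below is a hypothetical addition that works on the list of names returned by get_available_devices(); it operates on plain strings so it can be tried without a GPU, and it accepts both the /device:GPU:0 naming and the older /gpu:0 form, since which one you see depends on the TensorFlow version.

```python
def gpu_devices(device_names):
    """Keep only GPU entries from a list of TensorFlow device names.

    Handles both the '/device:GPU:0' form and the older '/gpu:0' form.
    """
    gpus = []
    for name in device_names:
        parts = name.split(":")
        # The device type is the component just before the index.
        if len(parts) >= 2 and parts[-2].lstrip("/").upper() == "GPU":
            gpus.append(name)
    return gpus


# Illustrative input; in the notebook you would pass get_available_devices() instead.
names = ["/device:CPU:0", "/device:GPU:0"]
print(gpu_devices(names))  # ['/device:GPU:0']
```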

From the Jupyter home page, open a new terminal:

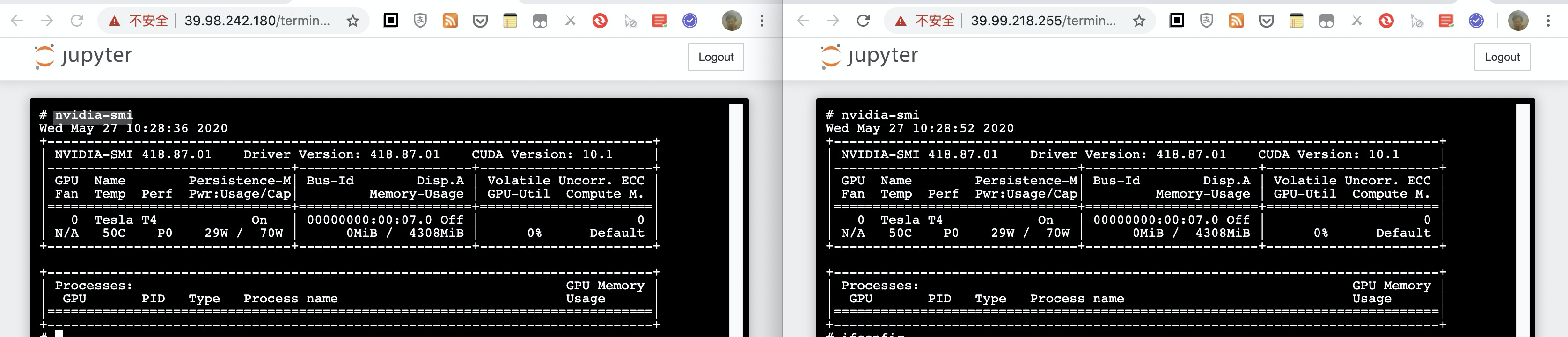

Run nvidia-smi in the terminal.

Verify GPU sharing

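The steps above show that a Pod can request GPU resources through the new resource aliyun.com/gpu-mem: 4 and run GPU workloads normally. Next, let's look at how the GPU is shared.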

Check resource usage

First, the current resource usage as reported by arena top node -s -d:

```
jumper(⎈ |zjk-gpu:default)➜ ~ arena top node -s -d

NAME:       cn-zhangjiakou.192.168.3.184
IPADDRESS:  192.168.3.184

NAME                            NAMESPACE  GPU0(Allocated)
tf-notebook-2-7b4d68d8f7-wxlff  default    4
tf-notebook-3-86c48d4c7d-lk9h8  default    4
tf-notebook-7cf4575d78-9gxzd    default    4
Allocated :                                12 (85%)
Total :                                    14
--------------------------------------------------------------------------------------------------------------------------------------

Allocated/Total GPU Memory In GPUShare Node:
12/14 (GiB) (85%)
```

Deploy more services and replicas

To give each notebook its own entry point, we create three Services pointing to three Pods. The YAML files are:

_PS: mem_deployment-2.yaml and mem_deployment-3.yaml are almost identical to mem_deployment.yaml; only the names change, so that each Service points to a different Pod._
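Because the three files differ only in the name used by the Deployment, its labels and selector, and the Service, they could also be generated instead of copied by hand. The sketch below is one hypothetical way to do that: notebook_manifests (a made-up helper) rebuilds the Deployment and Service from mem_deployment.yaml as plain dictionaries for a given name. kubectl can read JSON manifests, including a kind: List wrapper, so the printed output could be saved to a file and applied; converting to YAML instead would need an extra library such as PyYAML.

```python
import json


def notebook_manifests(name, gpu_mem_gib=4, password="mypassw0rd"):
    """Build the Deployment and Service from mem_deployment.yaml for a given name."""
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "tf-notebook",
                        "image": "tensorflow/tensorflow:1.4.1-gpu-py3",
                        "resources": {
                            "limits": {"aliyun.com/gpu-mem": gpu_mem_gib},
                            "requests": {"aliyun.com/gpu-mem": gpu_mem_gib},
                        },
                        "ports": [{"containerPort": 8888}],
                        "env": [{"name": "PASSWORD", "value": password}],
                    }],
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "ports": [{"port": 80, "targetPort": 8888, "name": "jupyter"}],
            "selector": labels,
            "type": "LoadBalancer",
        },
    }
    return [deployment, service]


manifests = notebook_manifests("tf-notebook-2")
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```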

mem_deployment-2.yaml

```yaml
---
# Define the tensorflow deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tf-notebook-2
  labels:
    app: tf-notebook-2
spec:
  replicas: 1
  selector: # define how the deployment finds the pods it manages
    matchLabels:
      app: tf-notebook-2
  template: # define the pod specification
    metadata:
      labels:
        app: tf-notebook-2
    spec:
      containers:
      - name: tf-notebook
        image: tensorflow/tensorflow:1.4.1-gpu-py3
        resources:
          limits:
            aliyun.com/gpu-mem: 4
          requests:
            aliyun.com/gpu-mem: 4
        ports:
        - containerPort: 8888
        env:
        - name: PASSWORD
          value: mypassw0rd
---
# Define the tensorflow service
apiVersion: v1
kind: Service
metadata:
  name: tf-notebook-2
spec:
  ports:
  - port: 80
    targetPort: 8888
    name: jupyter
  selector:
    app: tf-notebook-2
  type: LoadBalancer
```

mem_deployment-3.yaml

```yaml
---
# Define the tensorflow deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tf-notebook-3
  labels:
    app: tf-notebook-3
spec:
  replicas: 1
  selector: # define how the deployment finds the pods it manages
    matchLabels:
      app: tf-notebook-3
  template: # define the pod specification
    metadata:
      labels:
        app: tf-notebook-3
    spec:
      containers:
      - name: tf-notebook
        image: tensorflow/tensorflow:1.4.1-gpu-py3
        resources:
          limits:
            aliyun.com/gpu-mem: 4
          requests:
            aliyun.com/gpu-mem: 4
        ports:
        - containerPort: 8888
        env:
        - name: PASSWORD
          value: mypassw0rd
---
# Define the tensorflow service
apiVersion: v1
kind: Service
metadata:
  name: tf-notebook-3
spec:
  ports:
  - port: 80
    targetPort: 8888
    name: jupyter
  selector:
    app: tf-notebook-3
  type: LoadBalancer
```

Apply the two YAML files. Together with the Pod and Service deployed earlier, the cluster now runs 3 Pods and 3 Services:

```
jumper(⎈ |zjk-gpu:default)➜ ~ kubectl apply -f mem_deployment-2.yaml
deployment.apps/tf-notebook-2 created
service/tf-notebook-2 created
jumper(⎈ |zjk-gpu:default)➜ ~ kubectl apply -f mem_deployment-3.yaml
deployment.apps/tf-notebook-3 created
service/tf-notebook-3 created
jumper(⎈ |zjk-gpu:default)➜ ~ kubectl get svc
NAME            TYPE           CLUSTER-IP    EXTERNAL-IP     PORT(S)        AGE
kubernetes      ClusterIP      172.21.0.1    <none>          443/TCP        11d
tf-notebook     LoadBalancer   172.21.2.50   39.100.193.19   80:32285/TCP   7h48m
tf-notebook-2   LoadBalancer   172.21.1.46   39.99.218.255   80:30659/TCP   8m53s
tf-notebook-3   LoadBalancer   172.21.8.56   39.98.242.180   80:31274/TCP   7s
jumper(⎈ |zjk-gpu:default)➜ ~ kubectl get pod -o wide
NAME                             READY   STATUS    RESTARTS   AGE     IP             NODE                           NOMINATED NODE   READINESS GATES
tf-notebook-2-7b4d68d8f7-mb852   1/1     Running   0          9m6s    172.20.64.21   cn-zhangjiakou.192.168.3.184   <none>           <none>
tf-notebook-3-86c48d4c7d-flz7m   1/1     Running   0          20s     172.20.64.22   cn-zhangjiakou.192.168.3.184   <none>           <none>
tf-notebook-7cf4575d78-sxmfl     1/1     Running   0          7h49m   172.20.64.14   cn-zhangjiakou.192.168.3.184   <none>           <none>
jumper(⎈ |zjk-gpu:default)➜ ~ arena top node -s
NAME                          IPADDRESS      GPU0(Allocated/Total)
cn-zhangjiakou.192.168.3.184  192.168.3.184  12/14
----------------------------------------------------------------------
Allocated/Total GPU Memory In GPUShare Node:
12/14 (GiB) (85%)
```

Check the final result

As shown above:

kubectl get pod -o wide shows 3 Pods running on node cn-zhangjiakou.192.168.3.184.

arena top node -s shows that 12 of the 14 GiB of GPU memory on node cn-zhangjiakou.192.168.3.184 are allocated.
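Note that 12 of 14 is about 85.7%, while arena prints 85%. These numbers are consistent with arena truncating the percentage to an integer rather than rounding it; that is an inference from the output, not documented behavior. The sketch below shows both readings side by side.

```python
import math

allocated, total = 12, 14  # GiB allocated / total on the shared GPU, from arena top node -s

exact = allocated / total * 100
truncated = math.floor(exact)  # matches the 85% arena prints
rounded = round(exact)         # what rounding to the nearest integer would give

print(f"{exact:.1f}% exact, {truncated}% truncated, {rounded}% rounded")
```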

Opening a terminal in each of the notebooks and running nvidia-smi shows that each Pod's GPU memory limit is 4308MiB.
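Why 4308 MiB rather than 4 x 1024 = 4096 MiB? The figures in this article are consistent with the following arithmetic, although this is an inference and not documented cGPU behavior: the Tesla T4 reports 15079 MiB, which floors to 14 whole GiB (the Total of 14 shown by arena), and if those 15079 MiB are split into 14 equal units, the 4 units requested through aliyun.com/gpu-mem: 4 come to about 4308 MiB.

```python
total_mib = 15079                  # Tesla T4 memory reported by nvidia-smi on the node
units = total_mib // 1024          # 14 whole GiB, the Total shown by arena
unit_mib = total_mib / units       # size of one unit if memory is split evenly (assumption)
pod_limit_mib = int(4 * unit_mib)  # aliyun.com/gpu-mem: 4

print(units, pod_limit_mib)  # 14 4308
```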

Run the following command on node cn-zhangjiakou.192.168.3.184 to check the GPU resources on the node:

```
[root@iZ8vb4lox93w3mhkqmdrgsZ ~]# nvidia-smi
Wed May 27 12:19:25 2020
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 418.87.01    Driver Version: 418.87.01    CUDA Version: 10.1     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla T4            On   | 00000000:00:07.0 Off |                    0 |
| N/A   49C    P0    29W /  70W |   4019MiB / 15079MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|    0     11563      C   /usr/bin/python3                            4009MiB |
+-----------------------------------------------------------------------------+
```

This shows that with cGPU, more GPU-consuming Pods can be deployed on the same node, whereas with ordinary scheduling a node with one GPU can run only one GPU Pod.

A real program

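The following code keeps using GPU resources continuously. The parameter fraction is the share of available GPU memory to request, and it defaults to 0.7. We run this program in the Jupyter instance of each of the 3 Pods: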

```python
import argparse

import tensorflow as tf  # TensorFlow 1.x API (tf.ConfigProto, tf.Session)

FLAGS = None


def train(fraction=1.0):
    # Cap this process at `fraction` of the GPU memory it can see.
    config = tf.ConfigProto()
    config.gpu_options.per_process_gpu_memory_fraction = fraction

    a = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[2, 3], name='a')
    b = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[3, 2], name='b')
    c = tf.matmul(a, b)

    # Create a session that uses the memory-capped config.
    sess = tf.Session(config=config)

    # Run the op in an endless loop to keep the GPU busy.
    while True:
        sess.run(c)


if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--total', type=float, default=1000,
                        help='Total GPU memory.')
    parser.add_argument('--allocated', type=float, default=1000,
                        help='Allocated GPU memory.')
    # parse_known_args ignores the extra arguments Jupyter passes to the kernel.
    FLAGS, unparsed = parser.parse_known_args()
    # fraction = FLAGS.allocated / FLAGS.total * 0.85
    fraction = round(FLAGS.allocated * 0.7 / FLAGS.total, 1)
    print(fraction)  # defaults to 0.7: the program uses at most 70% of the GPU memory
    train(fraction)
```

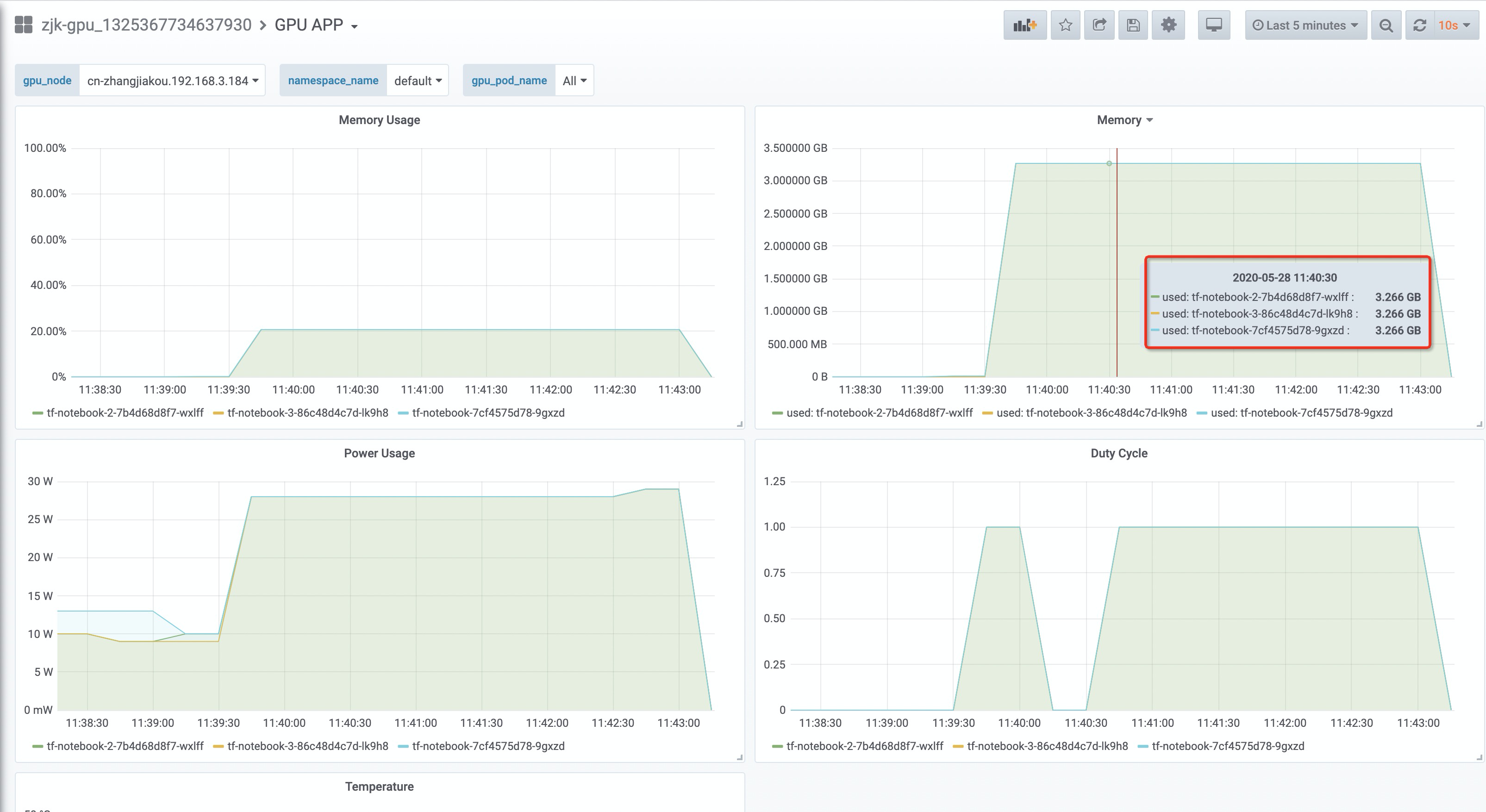

然後通過託管版Prometheus來觀察具體的資源使用情況

如上圖所示,每個Pod實際使用顯存3.266GB,亦即每個Pod的使用的顯存資源都限制到了4

Summary

To sum up:

- 通過給節點添加cgpu: true標籤將節點設置為GPU共享型節點。

- 在pod中通過 類型

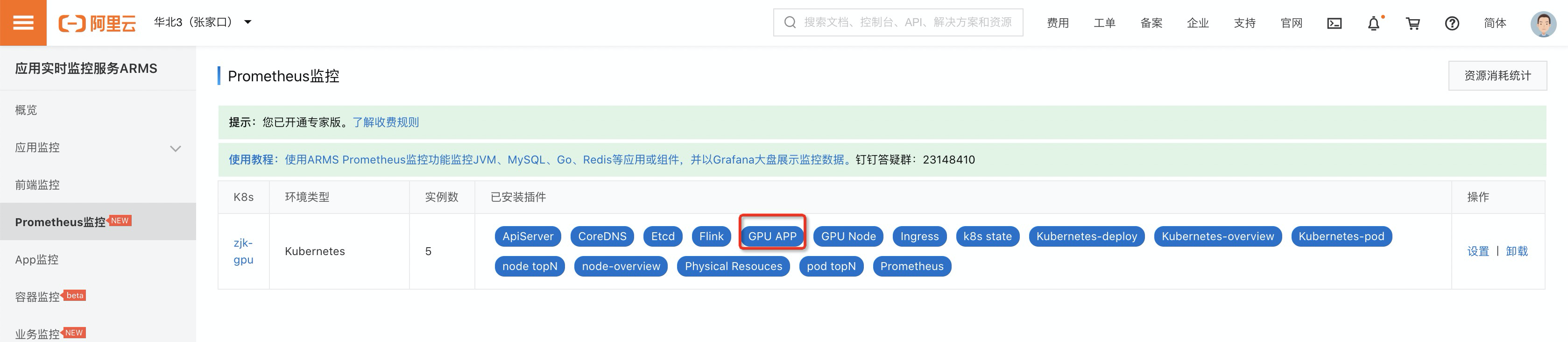

aliyun.com/gpu-mem: 4的資源來申請和限制單個pod使用的資源,進而達到GPU共享的目的,每個pod都可以提供完整的GPU能力; 而Node上的一個GPU資源分享給了3個Pod使用,利用率提升到300% -- 如果資源拆分更小,還可以達到更高的利用率。 - 通過

arena top node、arena top node -s來查看GPU資源分配的情況 - 通過 託管版Prometheus的“GPU APP” 大盤可以看到實際運行時使用的顯存、GPU、溫度、功率等信息。
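The remark that smaller splits give higher utilization can be made concrete with the 14 GiB T4 used in this article. The sketch below is pure arithmetic with a hypothetical helper: it counts how many Pods of a given aliyun.com/gpu-mem request fit on one GPU, compared with one Pod per GPU under exclusive scheduling. Whether each notebook can actually work within a smaller share is a separate question the sketch does not model.

```python
GPU_MEM_UNITS = 14  # GiB units reported by arena for one Tesla T4 in this article


def pods_per_gpu(request_gib, total_gib=GPU_MEM_UNITS):
    """How many Pods requesting request_gib of aliyun.com/gpu-mem fit on one GPU."""
    return total_gib // request_gib


for request in (4, 2, 1):
    shared = pods_per_gpu(request)
    print(f"gpu-mem {request}: {shared} Pods per GPU, {shared * 100}% of the exclusive case")
```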

References

Managed Prometheus: https://help.aliyun.com/document_detail/122123.html

cGPU GPU-sharing solution: https://help.aliyun.com/document_detail/163994.html

arena: https://github.com/kubeflow/arena